I thought it might be helpful for people following my guide for installing macOS 12 Monterey on Proxmox if I described my setup and how I’m using macOS.

Proxmox hardware specs

- Motherboard: ASRock EP2C602
- RAM: 64GB
- CPU: 2 x Intel E5-2687W v2 for a total of 16 cores / 32 threads
- Storage
  - ASUS Hyper M.2 X16 PCIe 4.0 X4 Expansion Card
  - Samsung 970 Evo 1TB NVMe SSD for macOS
  - Samsung 950 Pro 512GB NVMe SSD
  - 38TB of spinning disks in various ZFS configurations
  - 1TB SATA SSD for Proxmox’s root device
- Graphics
  - EVGA GeForce GTX 1060 6GB
  - AMD Radeon R9 280X (HD 7970 / Tahiti XTL) (not currently installed)
  - AMD Sapphire Radeon RX 580 Pulse 8GB (11265-05-20G)
- IO
  - 2x onboard Intel C600 USB 2 controllers
  - Inateck USB 3 PCIe card (Fresco Logic FL1100 chipset)
  - 2x onboard Intel 82574L gigabit network ports
- Case
  - Lian Li PC-X2000F full tower (sadly, long discontinued!)
  - Lian Li EX-H35 HDD Hot Swap Module (to add 3 x 3.5″ drive bays into 3 of the 4 x 5.25″ drive mounts), with Lian Li BZ-503B filter door, and Lian Li BP3SATA hot swap backplane. Note that because of the sideways-mounted 5.25″ design on this case, the door will fit flush with the left side of the case, while the unfiltered exhaust fan sits some 5-10mm proud of the right side of the case.
- CPU cooler
  - 2 x Noctua NH-U14S coolers
- Power
  - EVGA SuperNOVA 750 G2 750W

My Proxmox machine is my desktop computer, so I pass most of this hardware straight through to the macOS Monterey VM that I use as my daily-driver machine. I pass through both USB 2 controllers, the USB 3 controller, an NVMe SSD, and one of the gigabit network ports, plus the RX 580 graphics card.

Attached to the USB controllers I pass through to macOS are a Bluetooth adapter, keyboard, Logitech trackball dongle, and sometimes a DragonFly Black USB DAC for audio (my host motherboard has no audio onboard).

Once macOS boots, this leaves no USB ports dedicated to the host, so no keyboard for the host! I normally manage my Proxmox host from a guest, or if all of my guests are down I use SSH from a laptop or smartphone instead (JuiceSSH on Android works nicely for running qm start 100 to boot up my macOS VM if I accidentally shut it down).

On High Sierra, I used to use the GTX 750 Ti, then later the GTX 1060, to drive two displays (one of them 4K@60Hz over DisplayPort), and both worked flawlessly. However, NVIDIA drivers are not available for Monterey, so now I’m back on AMD.

My old AMD R9 280X had some support in Catalina, but I got intermittent flashing video corruption on parts of the screen. It wasn’t stable on the host either, and triggered DMAR warnings suggesting that it tried to read memory it doesn’t own. This all came to a head after upgrading to 10.15.4: it just went to a black screen 75% of the way through boot, and the system log showed that the GPU driver had crashed and never came back.

Now I’m using the Sapphire Radeon Pulse RX 580 8GB as suggested by Passthrough Post. This one is well supported by macOS; Apple even recommends it on their website. Newer GPUs than this, and siblings of this GPU, suffer from the AMD reset bug that makes them a pain in the ass to pass through. You can now use the vendor-reset module to fix some of these.

Despite good experiences reported by other users, I’m still getting reset-bug-like behaviour on some guest boots, which causes a hang at the 75% mark on the progress bar just as the graphics would be initialised (screen does not go to black). At the same time this is printed to dmesg:

pcieport 0000:00:02.0: AER: Uncorrected (Non-Fatal) error received: 0000:00:02.0
pcieport 0000:00:02.0: AER: PCIe Bus Error: severity=Uncorrected (Non-Fatal), type=Transaction Layer, (Requester ID)
pcieport 0000:00:02.0: AER: device [8086:3c04] error status/mask=00004000/00000000
pcieport 0000:00:02.0: AER: [14] CmpltTO (First)
pcieport 0000:00:02.0: AER: Device recovery successful

This eventually hangs the host. It looks like the host needs to be power-cycled between guest boots for the RX 580 to be fully reset.

On this motherboard is a third SATA controller, a Marvell SE9230, but enabling this in the ASRock UEFI setup causes it to throw a ton of DMAR errors and kill the host, so avoid using it.

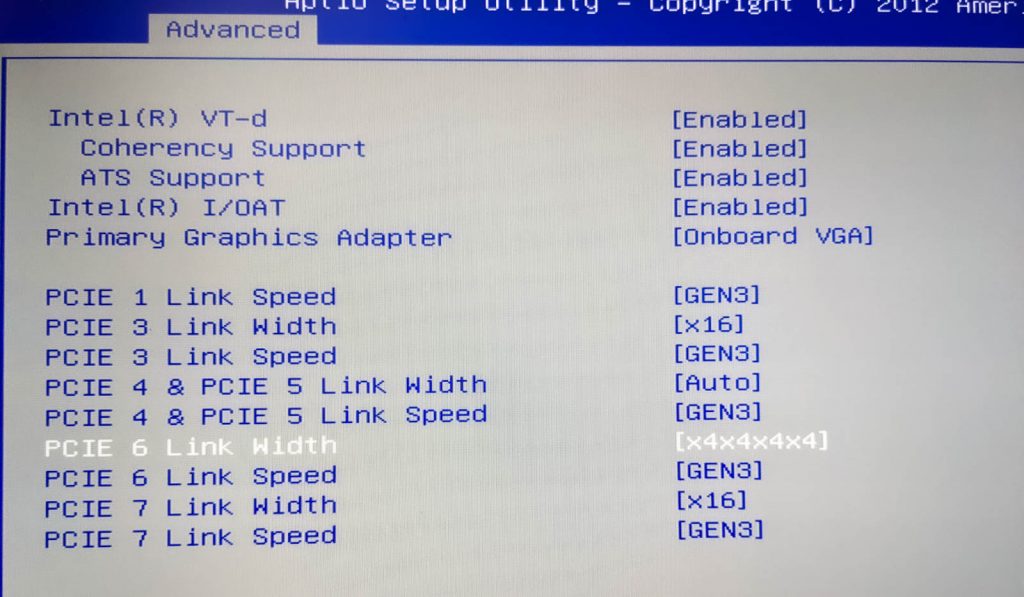

My ASUS Hyper M.2 X16 PCIe 4.0 X4 Expansion Card allows motherboards that support PCIe-slot bifurcation to add up to 4 NVMe SSDs to a single x16 slot, which is wonderful for expanding storage. Note that bifurcation support is an absolute requirement with this card: if your system doesn’t support it, you need to use a card that has a PCIe switch chip onboard instead.

What I use it for

I’m using my Monterey VM for developing software (IntelliJ / Xcode), watching videos (YouTube / mpv), playing music, editing photos with Photoshop and Lightroom, editing video with DaVinci Resolve, buying apps on the App Store, syncing data with iCloud, and more. That all works trouble-free. I don’t use any of the Apple apps that are known to be troublesome on a Hackintosh (iMessage, etc.), so I’m not sure whether those work.

VM configuration

Here’s my VM’s Proxmox configuration, with discussion to follow:

agent: 1
args: -device isa-applesmc,osk="..." -smbios type=2 -cpu host,kvm=on,vendor=GenuineIntel,+kvm_pv_unhalt,+kvm_pv_eoi,+hypervisor,+invtsc,+pdpe1gb,check -smp 32,sockets=2,cores=8,threads=2 -device 'pcie-root-port,id=ich9-pcie-port-6,addr=10.1,x-speed=16,x-width=32,multifunction=on,bus=pcie.0,port=6,chassis=6' -device 'vfio-pci,host=0000:0a:00.0,id=hostpci5,bus=ich9-pcie-port-6,addr=0x0,x-pci-device-id=0x10f6,x-pci-sub-vendor-id=0x0000,x-pci-sub-device-id=0x0000' -global ICH9-LPC.acpi-pci-hotplug-with-bridge-support=off
balloon: 0
bios: ovmf
boot: order=hostpci4
cores: 16
cpu: Penryn
efidisk0: vms:vm-100-disk-1,size=128K
hostpci0: 03:00,pcie=1,x-vga=on
hostpci1: 00:1a.0,pcie=1
hostpci2: 00:1d.0,pcie=1
hostpci3: 82:00.0,pcie=1
hostpci4: 81:00.0,pcie=1
# hostpci5: 0a:00.0,pcie=1
hugepages: 1024
machine: pc-q35-6.1
memory: 40960
name: Monterey
net0: vmxnet3=2B:F9:52:54:FE:8A,bridge=vmbr0
numa: 1
onboot: 1
ostype: other
scsihw: virtio-scsi-pci
smbios1: uuid=42c28f01-4b4e-4ef8-97ac-80dea43c0bcb
sockets: 2
tablet: 0
vga: none
hookscript: local:snippets/hackintosh.sh

- agent
  - Enabling the agent enables Proxmox’s “shutdown” button to ask macOS to perform an orderly shutdown.
- args
  - OpenCore now allows “cpu” to be set to “host” to pass through all supported CPU features automatically. The OC config causes the CPU to masquerade as Penryn to macOS to keep macOS happy.
  - I’m passing through all 32 of my host threads to macOS. Proxmox’s configuration format doesn’t natively support setting a thread count, so I had to add my topology manually here by adding “-smp 32,sockets=2,cores=8,threads=2”.
  - For an explanation of all that “-device” stuff on the end, read the “net0” section below.
- hostpci0-5
  - I’m passing through 6 PCIe devices, which is now natively supported by the latest version of Proxmox 6. From first to last I have my graphics card, two USB 2 controllers, my NVMe storage, a USB 3 controller, and one gigabit network card.
- hugepages
  - I’ve enabled 1GB hugepages on Proxmox, so I’m asking for 1024MB hugepages here. More details on that further down.
- memory
  - 40 gigabytes, baby!
- net0
  - I usually have this emulated network card disabled in Monterey’s network settings, and use my passthrough Intel 82574L instead.
  - Although Monterey has a driver for the Intel 82574L, the driver doesn’t match the exact PCI ID of the card I have, so the driver doesn’t load and the card remains inactive. Luckily we can edit the network card’s PCI ID to match what macOS is expecting. Here’s the card I’m using:

# lspci -nn | grep 82574L
0b:00.0 Ethernet controller [0200]: Intel Corporation 82574L Gigabit Network Connection [8086:10d3]

My PCI ID here is 8086:10d3. If you check the file /System/Library/Extensions/IONetworkingFamily.kext/Contents/PlugIns/Intel82574L.kext/Contents/Info.plist, you can see the device IDs that macOS supports with this driver:

<key>IOPCIPrimaryMatch</key>
<string>0x104b8086 0x10f68086</string>
<key>IOPCISecondaryMatch</key>
<string>0x00008086 0x00000000</string>
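Each match string packs an ID as 0x, then the 4-digit device ID, then the 4-digit vendor ID. So 0x10f68086 is 8086:10f6, and the 8086:10d3 card above would have to appear as 0x10d38086, which isn't in the list. Here is a small sketch for doing that conversion and check. `to_iopci_match` and `iopci_matches` are hypothetical helper names and are not part of macOS or Proxmox:

```bash
#!/usr/bin/env bash
# Hypothetical helpers: convert an lspci-style "vendor:device" ID into the
# 0x<device><vendor> form used in IOPCIPrimaryMatch, and check a match list.

# lower TEXT: print TEXT in lower case
lower() {
    printf '%s\n' "$1" | tr '[:upper:]' '[:lower:]'
}

# to_iopci_match VENDOR:DEVICE  (e.g. 8086:10d3 -> 0x10d38086)
to_iopci_match() {
    local vendor=${1%%:*}
    local device=${1##*:}
    lower "0x${device}${vendor}"
}

# iopci_matches VENDOR:DEVICE "MATCH LIST": succeed if the ID is listed
iopci_matches() {
    local wanted entry
    wanted=$(to_iopci_match "$1")
    for entry in $2; do
        if [ "$(lower "$entry")" = "$wanted" ]; then
            return 0
        fi
    done
    return 1
}
```

For example, `iopci_matches 8086:10d3 "0x104b8086 0x10f68086"` fails for this card. That failure is why its ID has to be faked.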

Let’s make the card pretend to be that 8086:10f6 (primary ID) 0000:0000 (sub ID) card. To do this we need to edit some hostpci device properties that Proxmox doesn’t support, so we need to move the hostpci device’s definition into the “args” where we can edit it.

First make sure the hostpci entry for the network card in the VM’s config is the one with the highest index, then run:

qm showcmd <your VM ID> --pretty

Find the two lines that define that card:

-device 'pcie-root-port,id=ich9-pcie-port-6,addr=10.1,x-speed=16,x-width=32,multifunction=on,bus=pcie.0,port=6,chassis=6' \
-device 'vfio-pci,host=0000:0a:00.0,id=hostpci5,bus=ich9-pcie-port-6,addr=0x0' \

Copy those two lines, remove the trailing backslashes, combine them into one line, and add them to the end of your “args” line. Now we can edit the second -device to add the fake PCI IDs (the new x-pci-device-id, x-pci-sub-vendor-id and x-pci-sub-device-id properties at the end):

-device 'pcie-root-port,id=ich9-pcie-port-6,addr=10.1,x-speed=16,x-width=32,multifunction=on,bus=pcie.0,port=6,chassis=6' -device 'vfio-pci,host=0000:0a:00.0,id=hostpci5,bus=ich9-pcie-port-6,addr=0x0,x-pci-device-id=0x10f6,x-pci-sub-vendor-id=0x0000,x-pci-sub-device-id=0x0000'
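The copy-and-join step can also be scripted. This is a sketch that assumes GNU sed and paste, as shipped on Proxmox. `join_showcmd_lines` and `add_fake_ids` are hypothetical helper names, and the sed pattern assumes the vfio-pci device is single-quoted exactly as `qm showcmd` prints it:

```bash
#!/usr/bin/env bash
# join_showcmd_lines: read "-device ... \" lines on stdin, strip the trailing
# backslashes and leading indentation, and join them into one line for "args:"
join_showcmd_lines() {
    sed -e 's/[[:space:]]*\\[[:space:]]*$//' -e 's/^[[:space:]]*//' | paste -sd' ' -
}

# add_fake_ids PROPS: append PROPS inside the quoted vfio-pci device definition
add_fake_ids() {
    local ids=$1
    sed "s/\(-device 'vfio-pci,[^']*\)'/\1,${ids}'/"
}
```

A possible usage for VM 100, grepping for the root port ID that both lines share:

qm showcmd 100 --pretty | grep ich9-pcie-port-6 | join_showcmd_lines | add_fake_ids "x-pci-device-id=0x10f6,x-pci-sub-vendor-id=0x0000,x-pci-sub-device-id=0x0000"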

Then comment out the “hostpci5” line for the network card, since we’re now defining it manually through the args instead. macOS should now see this card’s ID as if it were one of the supported cards, and everything works!

- vga
  - I need to set this to “none”, since otherwise the crappy emulated video card would become the primary video adapter, and I only want my passthrough card to be active.
- hookscript
  - This is a new feature in Proxmox 5.4 that allows a script to be run at various points in the VM lifecycle.
  - In recent kernel versions, some devices like my USB controllers are grabbed by the host kernel very early during boot, before vfio can claim them. This means that I need to manually release those devices in order to start the VM. I created /var/lib/vz/snippets/hackintosh.sh with this content (and marked it executable with chmod +x):

#!/usr/bin/env bash

if [ "$2" == "pre-start" ]
then
    # First release devices from their current driver (by their PCI bus IDs)
    echo 0000:00:1d.0 > /sys/bus/pci/devices/0000:00:1d.0/driver/unbind
    echo 0000:00:1a.0 > /sys/bus/pci/devices/0000:00:1a.0/driver/unbind
    echo 0000:81:00.0 > /sys/bus/pci/devices/0000:81:00.0/driver/unbind
    echo 0000:82:00.0 > /sys/bus/pci/devices/0000:82:00.0/driver/unbind
    echo 0000:0a:00.0 > /sys/bus/pci/devices/0000:0a:00.0/driver/unbind

    # Then attach them by ID to VFIO
    echo 8086 1d2d > /sys/bus/pci/drivers/vfio-pci/new_id
    echo 8086 1d26 > /sys/bus/pci/drivers/vfio-pci/new_id
    echo 1b73 1100 > /sys/bus/pci/drivers/vfio-pci/new_id
    echo 144d a802 > /sys/bus/pci/drivers/vfio-pci/new_id

    # The network card no longer binds via new_id, so force vfio-pci for it
    # with driver_override and ask the kernel to re-probe it
    echo vfio-pci > /sys/bus/pci/devices/0000:0a:00.0/driver_override
    echo 0000:0a:00.0 > /sys/bus/pci/drivers_probe
fi
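The hookscript above mixes new_id and driver_override. A variant could use driver_override for every device, which is the approach the 2021-12-23 note switches to for the network card. A sketch of that variant follows. It is untested on this machine, and the `DEVICES` list is copied from the VM config above, so it must be changed to match your own hardware. `SYSFS` is overridable only so the logic can be exercised against a fake directory tree. The script assumes the vfio-pci module is already loaded, as /etc/modules below arranges:

```bash
#!/usr/bin/env bash
# Sketch of a Proxmox hookscript that hands devices to vfio-pci using
# driver_override. Proxmox runs hookscripts as: <script> <vmid> <phase>
# SYSFS is overridable only so the logic can be tried on a fake tree.
SYSFS=${SYSFS:-/sys}

# PCI addresses from the VM config above; adjust for your own hardware
DEVICES="0000:00:1a.0 0000:00:1d.0 0000:81:00.0 0000:82:00.0 0000:0a:00.0"

bind_to_vfio() {
    local dev=$1
    local path="$SYSFS/bus/pci/devices/$dev"
    # From now on, only vfio-pci may claim this device
    echo vfio-pci > "$path/driver_override"
    # Release it from its current driver, if it has one
    if [ -e "$path/driver" ]; then
        echo "$dev" > "$path/driver/unbind"
    fi
    # Re-probe the device so vfio-pci picks it up
    echo "$dev" > "$SYSFS/bus/pci/drivers_probe"
}

main() {
    local dev
    # $1 is the VM ID, $2 is the lifecycle phase
    if [ "$2" = "pre-start" ]; then
        for dev in $DEVICES; do
            bind_to_vfio "$dev"
        done
    fi
}

main "$@"
```

One design difference from new_id: driver_override applies to a single PCI address. Identical devices elsewhere in the system, such as the second 82574L port, are left alone for the host.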

Guest file storage

The macOS VM’s primary storage is the passthrough Samsung 970 Evo 1TB NVMe SSD, which can be installed onto and used in Monterey. However, since TRIM is broken in Monterey, SetApfsTrimTimeout needs to be set in my config.plist to disable it, so that it doesn’t slow down boot.

For secondary storage, my Proxmox host exports a number of directories over the AFP network protocol using netatalk.

Proxmox 5

Debian Stretch’s version of the netatalk package is seriously out of date (and I’ve had file corruption issues with old versions), so I installed netatalk onto Proxmox from source instead following these directions:

http://netatalk.sourceforge.net/wiki/index.php/Install_Netatalk_3.1.11_on_Debian_9_Stretch

My configure command ended up being:

./configure --with-init-style=debian-systemd --without-libevent --without-tdb --with-cracklib --enable-krbV-uam --with-pam-confdir=/etc/pam.d --with-dbus-daemon=/usr/bin/dbus-daemon --with-dbus-sysconf-dir=/etc/dbus-1/system.d --with-tracker-pkgconfig-version=1.0

Netatalk is configured in /usr/local/etc/afp.conf like so:

; Netatalk 3.x configuration file

[Global]

[Downloads]
path = /tank/downloads
rwlist = nick ; List of usernames with rw permissions on this share

[LinuxISOs]
path = /tank/isos
rwlist = nick

When connecting to the fileshare from macOS, you connect with a URL like “afp://proxmox”, then specify the name and password of the unix user you’re authenticating as (here, “nick”), and that user’s account will be used for all file permissions checks.

Proxmox 7

Proxmox 7’s prebuilt version of Netatalk is good now, so I backed up my afp.conf, removed my old version that was installed from source (with “make uninstall”, note that this erases afp.conf!), and apt-installed the netatalk package instead. The configuration is now found at /etc/netatalk/afp.conf.

Proxmox configuration

Passthrough of PCIe devices requires a bit of configuration on Proxmox’s side, much of which is described in their manual. Here’s what I ended up with:

/etc/default/grub

...
GRUB_CMDLINE_LINUX_DEFAULT="quiet intel_iommu=on rootdelay=10"
...

Note that Proxmox 7 will be booted using systemd-boot rather than GRUB if you are using a ZFS root volume and booting using UEFI. If you’re using systemd-boot you need to create this file instead:

/etc/kernel/cmdline (Proxmox 7 when using systemd-boot)

root=ZFS=rpool/ROOT/pve-1 boot=zfs intel_iommu=on rootdelay=10

/etc/modules

vfio
vfio_iommu_type1
vfio_pci
vfio_virqfd

/etc/modprobe.d/blacklist.conf

blacklist nouveau
blacklist nvidia
blacklist nvidiafb
blacklist snd_hda_codec_hdmi
blacklist snd_hda_intel
blacklist snd_hda_codec
blacklist snd_hda_core
blacklist radeon
blacklist amdgpu

/etc/modprobe.d/kvm.conf

options kvm ignore_msrs=Y

/etc/modprobe.d/kvm-intel.conf

# Nested VM support
options kvm-intel nested=Y

/etc/modprobe.d/vfio-pci.conf

# Note that adding disable_vga here will probably prevent guests from booting in SeaBIOS mode
options vfio-pci ids=144d:a802,8086:1d2d,8086:1d26,10de:1c03,10de:10f1,10de:1380,1b73:1100,1002:6798,1002:aaa0 disable_vga=1
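The ids= list is just the vendor:device pairs that `lspci -n` prints for each device you want vfio-pci to claim at boot. Here is a small sketch that builds the list from `lspci -n` output and drops duplicates. `vfio_ids` is a hypothetical helper name. Keep in mind that ids= matches every device with that ID, so listing a model you own two of, such as the dual 82574L ports, would take both away from the host:

```bash
#!/usr/bin/env bash
# vfio_ids: turn "lspci -n" lines on stdin (e.g. "00:1a.0 0c03: 8086:1d2d")
# into the comma-separated vendor:device list used by "options vfio-pci ids=",
# keeping the first occurrence of each ID
vfio_ids() {
    awk '!seen[$3]++ { print $3 }' | paste -sd, -
}
```

For example, `lspci -n -s 03:00 | vfio_ids` would list every function of the card at 03:00, which typically includes its HDMI audio device.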

After editing those files you typically need to run update-grub (or pve-efiboot-tool refresh if you are using systemd-boot on Proxmox 7), update-initramfs -k all -u, then reboot Proxmox.
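After rebooting, it's worth confirming that the IOMMU is actually on and checking how devices are grouped, because all the devices in one IOMMU group generally have to be handed to vfio together. Here is a sketch that walks /sys/kernel/iommu_groups. `list_iommu_groups` is a hypothetical helper name. If it prints nothing, intel_iommu=on probably didn't take effect:

```bash
#!/usr/bin/env bash
# list_iommu_groups [ROOT]: print "group pci-address" for every device,
# sorted by group number (ROOT defaults to the real sysfs location)
list_iommu_groups() {
    local root="${1:-/sys/kernel/iommu_groups}"
    local dev group
    for dev in "$root"/*/devices/*; do
        [ -e "$dev" ] || continue   # glob didn't match: no groups at all
        group=${dev#"$root"/}
        group=${group%%/*}
        printf '%s %s\n' "$group" "${dev##*/}"
    done | sort -k1,1n -k2,2
}
```

You can then run `lspci -nns <address>` on any entry to see which device it is.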

Host configuration

In the UEFI settings of my host system I had to set my onboard video card as my primary video adapter. Otherwise, the VBIOS of my discrete video cards would get molested by the host during boot, rendering them unusable for guests (this is especially a problem if your host boots in BIOS compatibility mode instead of UEFI mode).

One way to avoid needing to change this setting (e.g. if you only have one video card in your system!) is to dump the unmolested VBIOS of the card while it is attached to the host as a secondary card, then store a copy of the VBIOS as a file in Proxmox’s /usr/share/kvm directory, and provide it to the VM by using a “romfile” option like so:

hostpci0: 01:00,x-vga=on,romfile=my-vbios.bin

If you don’t have a spare discrete GPU of your own to do this, you may be able to find a VBIOS dump for the same card that somebody else has made and shared online. However, I have never tried this approach myself.
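For reference, the usual way to read a card's ROM on Linux is through the `rom` file that sysfs exposes for each PCI device. This is an untested sketch: `dump_vbios` is a hypothetical helper name, and `SYSFS` is overridable only for testing. Reads can fail or return an incomplete image on some cards, especially one in use as the primary display, so check the result before relying on it:

```bash
#!/usr/bin/env bash
# SYSFS is overridable only so the logic can be tried on a fake tree
SYSFS=${SYSFS:-/sys}

# dump_vbios PCI_ADDRESS OUTPUT_FILE: copy the device's ROM image to a file
dump_vbios() {
    local rom="$SYSFS/bus/pci/devices/$1/rom"
    local status
    # Writing 1 enables reading the ROM through sysfs
    echo 1 > "$rom" || return 1
    if cat "$rom" > "$2"; then status=0; else status=1; fi
    # Disable ROM reads again whether or not the copy worked
    echo 0 > "$rom"
    return "$status"
}
```

To match the romfile example above, this could be run as `dump_vbios 0000:01:00.0 /usr/share/kvm/my-vbios.bin`.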

Guest configuration

In Catalina, I have system sleep turned off in the power saving options (because I had too many instances where it went to sleep and never woke up again).

Launching the VM

I found that when I assign obscene amounts of RAM to the VM, it takes a long time for Proxmox to allocate the memory for it, causing a timeout during VM launch:

start failed: command '/usr/bin/kvm -id 100 ...' failed: got timeout

You can instead avoid Proxmox’s timeout system entirely by running the VM like:

qm showcmd 100 | bash

Another RAM problem comes if my host has done a ton of disk IO. This causes ZFS’s ARC (disk cache) to grow in size, and it seems like the ARC is not automatically released when that memory is needed to start a VM (maybe this is an interaction with the hugepages feature). So the VM will complain that it’s out of memory on launch even though there is plenty of memory marked as “cache” available.

You can clear this read-cache and make the RAM available again by running:

echo 3 > /proc/sys/vm/drop_caches
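To see how much memory the ARC is holding before and after dropping caches, ZFS on Linux reports the ARC's current size (in bytes) on the `size` line of /proc/spl/kstat/zfs/arcstats. Here is a sketch that reads it. `arc_size_mib` is a hypothetical helper name:

```bash
#!/usr/bin/env bash
# arc_size_mib [ARCSTATS]: print the current ZFS ARC size in MiB
arc_size_mib() {
    awk '$1 == "size" { printf "%d\n", $3 / 1048576 }' "${1:-/proc/spl/kstat/zfs/arcstats}"
}
```

For example, run `arc_size_mib`, then `echo 3 > /proc/sys/vm/drop_caches`, then `arc_size_mib` again to compare the two figures.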

1GB hugepages

My host supports 1GB hugepages:

# grep pdpe1gb /proc/cpuinfo
... nx pdpe1gb rdtsc ...

So I added this to the end of my /etc/kernel/cmdline to statically allocate 40GB of 1GB hugepages on boot:

default_hugepagesz=1G hugepagesz=1G hugepages=40

After running update-initramfs -u and rebooting, I can see that those pages have been successfully allocated:

# grep Huge /proc/meminfo
HugePages_Total: 40
HugePages_Free: 40
HugePages_Rsvd: 0
HugePages_Surp: 0
Hugepagesize: 1048576 kB
Hugetlb: 41943040 kB

It turns out that those hugepages are evenly split between my two NUMA nodes (20GB per CPU socket), so I have to set “numa: 1” in the VM config.
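The kernel reports each NUMA node's share of the 1GB pages under /sys/devices/system/node. Here is a sketch that prints it. `hugepages_per_node` is a hypothetical helper name:

```bash
#!/usr/bin/env bash
# hugepages_per_node [NODE_ROOT]: print "nodeN count" for 1GB hugepages
hugepages_per_node() {
    local root="${1:-/sys/devices/system/node}"
    local f node
    for f in "$root"/node*/hugepages/hugepages-1048576kB/nr_hugepages; do
        [ -e "$f" ] || continue   # no 1GB pool on this system
        node=${f#"$root"/}
        node=${node%%/*}
        printf '%s %s\n' "$node" "$(cat "$f")"
    done
}
```

With 40 pages split evenly between two sockets, you'd expect it to print 20 for each of node0 and node1.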

The final step is to add “hugepages: 1024” to the VM’s config file so it will use those 1024MB hugepages. Otherwise it’ll ignore them completely and continue to allocate from the general RAM pool, which will immediately run me out of RAM and cause the VM launch to fail. Other VMs won’t be able to use that memory, even if macOS isn’t running, unless they’re also configured to use 1GB hugepages.
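The page arithmetic is simple: memory: 40960 (MB) divided by 1024MB pages gives exactly the 40 pages reserved with hugepages=40. Below is a sketch of a pre-launch check under two assumptions. First, the default hugepage size is 1G, so HugePages_Free in /proc/meminfo counts 1GB pages. Second, only the global pool matters; the check does not look at the per-node split. The function names are hypothetical:

```bash
#!/usr/bin/env bash
# pages_needed MEMORY_MB PAGE_MB: hugepages needed to back MEMORY_MB, rounded up
pages_needed() {
    echo $(( ($1 + $2 - 1) / $2 ))
}

# free_hugepages [MEMINFO]: HugePages_Free (pages of the default size)
free_hugepages() {
    awk '/^HugePages_Free:/ { print $2 }' "${1:-/proc/meminfo}"
}

# can_start MEMORY_MB [MEMINFO]: succeed if enough free 1GB pages remain
can_start() {
    local need free
    need=$(pages_needed "$1" 1024)
    free=$(free_hugepages "$2")
    [ "${free:-0}" -ge "$need" ]
}
```

For this VM, that would be `can_start 40960 || echo "not enough free 1GB hugepages"`.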

You can also add “+pdpe1gb” to the list of the VM’s CPU features so that the guest kernel can use 1GB pages internally for its own allocations, but I doubt that macOS takes advantage of this feature.

Notes

2019-12-19

Upgraded to 1GB hugepages. Proxmox 6.1 and macOS 10.15.2.

2019-03-29

My VM was configured with a passthrough video card, and the config file also had “vga: std” in it. Normally if there is a passthrough card enabled, Proxmox disables the emulated VGA adapter, so this was equivalent to “vga: none”. However after upgrading pve-manager to 5.3-12, I found that the emulated vga adapter was re-enabled, so OpenCore ended up displaying on the emulated console, and both of my hardware monitors became “secondary” monitors in macOS. To fix this I needed to explicitly set “vga: none” in the VM configuration.

2019-04-12

Added “hookscript” to take advantage of new Proxmox 5.4 features

2019-07-18

Did an in-place upgrade to Proxmox 6 today!

Proxmox 6 now includes an up-to-date version of the netatalk package, so I use the prebuilt version instead of building it from source. Don’t forget to install my new patched pve-edk2-firmware package.

2020-03-27

I upgraded to macOS 10.15.4, but even after updating Clover, Lilu and WhateverGreen, my R9 280X only gives me a black screen, although the system does boot and I can access it using SSH. Looking at the boot log I can see a GPU restart is attempted, but fails. Will update with a solution if I find one.

EDIT: For the moment I built an offline installer ISO using a Catalina 10.15.3 installer I had lying around, and used that to reinstall macOS. Now I’m back on 10.15.3 and all my data is exactly where I left it, very painless. After the COVID lockdown I will probably try to replace my R9 280X with a better-supported RX 570 and try again.

2020-04-27

Updated to OpenCore instead of Clover. Still running 10.15.3. pve-edk2-firmware no longer needs to be patched when using OpenCore!

2020-06-05

Replaced my graphics card with an RX 580 to get 10.15.5 compatibility, and I’m back up and running now. Using a new method for fixing my passthrough network adapter’s driver which avoids patching Catalina’s drivers.

2020-12-03

Added second NVMe SSD and 4x SSD carrier card

2021-07-07

Upgraded from 2x E5-2670 to 2x E5-2687W v2. Fusion 360 is now 30% faster! Added photos of my rig

2021-07-10

Updated to Proxmox 7, and Hackintosh is running fine without any tweaks

2021-12-23

For some reason my network adapter no longer wants to bind to vfio-pci using “new_id”, so I had to use the “driver_override” approach instead in my hookscript snippet (updated above).

Nick, for us less experienced non-Linux users, could you explain scripts and snippets in a bit more detail? There’s no beginner info out there; everyone just assumes you know things. So…

How do you actually create an existing hookscript, or copy one over? I have one to stop the shutdown bug for my graphics card, so I’m a bit confused. Where is the snippets folder? Typically on my LVM drive? How do I create it? mkdir /var/lib/vz/snippets? Or is that wrong?

There’s no UI, so I was guessing command line? And do I use nano /var/lib/vz/snippets/<script name>.pl (a Perl script), paste the script into it, save, and make it executable (in this example, ‘chmod +x reset-gpu.pl’)? Could you give me a quick point-by-point walkthrough?

Many thanks

You can put your snippets in any “directory” storage, my VM config puts it in the storage “local” which is found at /var/lib/vz, so I created a snippets directory in there with “mkdir /var/lib/vz/snippets”. Then yes, you can just “nano /var/lib/vz/snippets/hackintosh.sh” to create the file and “chmod +x /var/lib/vz/snippets/hackintosh.sh” to make it executable.
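The same steps can be wrapped in a small shell function. `install_hookscript` is a hypothetical name, and the function assumes the “local” storage lives at /var/lib/vz as described above:

```bash
#!/usr/bin/env bash
# install_hookscript SOURCE [SNIPPETS_DIR]: create the snippets directory if
# needed, then copy SOURCE into it with executable permissions
install_hookscript() {
    local src=$1
    local dir=${2:-/var/lib/vz/snippets}
    mkdir -p "$dir" || return 1
    install -m 0755 "$src" "$dir/$(basename "$src")"
}
```

After `install_hookscript ./hackintosh.sh`, attach the script to the VM with `qm set 100 --hookscript local:snippets/hackintosh.sh`. That is equivalent to the hookscript line in the config above. Proxmox may also require the Snippets content type to be enabled on that storage (Datacenter > Storage in the web UI) before it will accept the script.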

Hi Nick,

Thanks for your efforts. I am running macOS Catalina on my Proxmox now, with GPU passthrough enabled. But as you wrote, the monitor becomes a secondary monitor if Display is set to VMware compatible.

When I change it to none, I don’t think my VM boots correctly. My monitor is blank. I already changed the timeout to 5 seconds, so it should boot automatically to the Catalina hard disk. But it’s blank.

Do you have any idea which settings I should check?

Thanks again

I’m having this same issue when passing through my Intel 530. VM conf below:

args: -device isa-applesmc,osk="…" -smbios type=2 -device usb-kbd,bus=ehci.0,port=2 -cpu host,kvm=on,vendor=GenuineIntel,+kvm_pv_unhalt,+kvm_pv_eoi,+hypervisor,+invtsc
balloon: 0
bios: ovmf
boot: c
bootdisk: sata0
cores: 4
cpu: Penryn
efidisk0: hdd-img:104/vm-104-disk-1.raw,size=128K
hostpci0: 00:02,pcie=1,x-vga=1
machine: q35
memory: 8192
name: catalina
net0: vmxnet3=[removed],bridge=vmbr0,firewall=1
numa: 0
ostype: other
sata0: hdd-img:104/vm-104-disk-0.raw,cache=unsafe,discard=on,size=64G,ssd=1
scsihw: virtio-scsi-pci
smbios1: uuid=[removed]
sockets: 1
vga: none
vmgenid: [removed]

Hello Nick,

Could you please elaborate about the specifics of OpenCore config with R9 280 passthrough?

I’m not running Proxmox, just NixOS with virt-manager.

I’ve been able to boot macOS just fine with shared VNC, but when I pass through the GPU, the boot drops out if VNC is on, and if VNC is off the VM just won’t boot at all. Neither dmesg nor the qemu *.log files let me locate the error; I suspect it is tied to OpenCore.

Thanks!

Hi there,

No config is required, it just works. What exactly do you see on your screen during the boot sequence? (at what point does it go wrong?)

EDIT: You might be running into the same problem as me, which is that my R9 280X doesn’t seem to be supported any more as of 10.15.4. I’m staying on 10.15.3 for the moment until I can either figure out how to make it work by OpenCore configuration, or buy a Sapphire Radeon Pulse RX 580 11265-05-20G…

Well, with VNC on I’m able to select my OS, then the Apple logo and progress bar appear, and at ~50% it reboots automatically.

Thanks Nick ! great material.

I recently followed the Proxmox/MacOs/Opencore post : https://www.nicksherlock.com/2020/04/installing-macos-catalina-on-proxmox-with-opencore/ and have it working.

One issue that I want to solve is audio. When I looked at my Proxmox host, I saw the following hardware:

00:1f.3 Audio device: Intel Corporation 200 Series PCH HD Audio
Subsystem: ASUSTeK Computer Inc. 200 Series PCH HD Audio
Flags: bus master, fast devsel, latency 32, IRQ 179
….
Kernel driver in use: snd_hda_intel
Kernel modules: snd_hda_intel

In the proxmox web-interface I see the following options : intel-hda, ich9-intel-hda and AC97.

Most of the info that I found points to USB passthrough and GPU/audio passthrough, but how can I use my onboard sound device? Can someone point me to a guide?

Thanks.

I don’t think macOS supports any of the QEMU emulated audio devices, or your onboard audio, though I’d be happy to be corrected.

Do you have any thoughts on getting an Intel HD 520 integrated graphics chip working in passthrough mode?

Here is my log and some links to another person who has tried:

https://gist.github.com/tachang/b659a98ce09554fa813dad2fbde98af2

Just kinda crashing near the end.

Sorry, my own machine doesn’t have integrated video so this is something I’ve never attempted. This line looks suspicious:

> GPU = S08 unknownPlatform (no matching vendor_id+device_id or GPU name)

Is this GPU supported by macOS? If it is, chances are that iMacPro1,1 is not an SMBIOS model that macOS expects that GPU to appear on; try an SMBIOS for a Mac model that had a similar iGPU.

Thank you so much for putting this together. This overview and the Installing Catalina with OpenCore guide are a complete game changer. Looking to improve my graphics performance, I bought and installed an AMD Sapphire Radeon RX 580 Pulse 8GB (11265-05-20G). I followed the PCI passthrough documentation and I see the card in my System Report, but it does not appear to be recognized at all. Does anyone have any ideas on what I could be missing?

You probably need to set vga: none in your VM config – it sounds like currently the emulated video is primary.

Thank you. I figured that was the problem as well. I have tried setting vga: none, but then I have no access to the VM. I cannot console to it, no Remote Desktop. It is booted as best as I can tell, but it never appears on the network and does not pull an IP address. I will keep banging on it.

Thanks again for the guide.

You need a monitor or HDMI dummy plug attached for the video card to be happy, the driver will fail to start otherwise and this kills the VM.

Proxmox’s console relies on the emulated video, so that’s definitely gone as soon as you set “vga: none”, you need to set up an alternate remote desktop solution like macOS’s Screen Sharing feature.

Hey Nick.

First and foremost, I gotta say thanks for the efforts so far into getting this to work on Proxmox.

I have a slightly different setup from yours, but most of it is the same. The two main differences are the GPU and the USB card: I am using an RX 5700 and a Renesas USB 3 card.

I noticed that the upstream kholia/OSX-KVM added a mXHCD.kext to the repository and you did not add that to your configuration, I’m assuming that’s because the FL1100 card has native support.

I, on the other hand, am using a card that I bought from AliExpress that has 4 dedicated USB controllers, which I can now split between my Windows VM and the macOS VM. You can find more information about the card at the following link: https://forums.unraid.net/topic/58843-functional-multi-controller-usb-pci-e-adapter/?do=findComment&comment=868053

I had to change the IOPCIMatch in the card from 0x01941033 to 0x00151912. I think the first device id is for the Renesas uPD720200 and mine is for the Renesas uPD720202. I am glad to report that this works though.

However, the card doesn’t show up in System Report, but using ioreg with:

> ioreg -p IOUSB -l -w 0

does show that the card is running at USB Super Speed.

I am assuming that the card doesn’t show up in system report due to the ACPI tables?

I have noticed some weird behavior in how it handles storage, and certain cables work in one port but not the other. That could be the way I’m connecting the devices, but I’ll check again. Unplugging and plugging in a device seems to work fine. Based on some initial testing, the device seems to be running at about a quarter of its rated speed, around 1.25Gbps, but that’s still better than USB 2. There could be other factors, but I’ll look into this more.

Hi, I’ve seen your work on the Unraid forums, it’s amazing.

But as far as I know (correct me if I’m wrong), the uPD720200 is not supported on Mac; only the FL1100 is supported.

Did you make it work on MacOSX ?

I added the mXHCD.kext to get it to work. But like I mentioned in the Unraid forum, streaming data doesn’t seem to work. E.g. webcam/audio.

I am now using the quad channel FL1100 and it works without the need of any additional kext. It shows up properly in the system report unlike the Renesas chipset.

It does however have the reset bug but I guess if you’re passing through AMD GPUs, it’s not that big of a problem since you would have to reboot the host anyway.

Could you share the brand of your FL1100 card?

I noticed that you bought two different kits, but you got the same kit twice?

Hi again,

I wanted to report back some observations from my setup:

– For me, there is no need to reserve any PCI devices, other than GPUs, via vfio for later passthrough to a VM. Like you, I pass through network and USB PCI devices. Proxmox (I am on the most current version) seems to be able to assign them to a VM anyway when the VM is launched. The Proxmox online documentation also seems to only recommend the vfio approach for GPUs (and not for other PCI devices).

– For the GPUs, in my case, it is sufficient to reserve them via vfio; I don’t blacklist the respective drivers.

– I reserve the GPUs via vfio in the kernel command line (not in modprobe.d). One is probably as good as the other, but my thought was (during initial setup) that if anything doesn’t work, I can edit the kernel command line easily during grub boot. This helped me recover the system more than once when I tried to reserve the host’s primary GPU via vfio (didn’t work and I ended up “sacrificing” a third GPU just for the host to claim so that the other GPUs remain free for passthrough)

– While my High Sierra VM with the NVIDIA web drivers seems to work relatively flawlessly, it is not exactly future proof. So I will follow your lead and try a Sapphire Radeon RX 580 Pulse (I don’t need super gaming power). The issues you are describing with it resemble the problems I had when I tried a FirePro W7000. I hope they don’t come back with the RX 580. Once I have the RX 580, I will set up one VM with Catalina and another one with your Big Sur guide.

Thanks for your great tutorials!

Hi again! Thanks for the detailed guide! I’ve already set up a guest with VMware VGA enabled. However, I got stuck when trying GPU passthrough. I have changed the plist settings so that it auto-boots after a 3s timeout, and this works fine when using VMware VGA. However, my monitors don’t receive any signal when I switch to the passed-through GPU (following the guide, I configured the GPU passthrough settings on the host, set Display to none, and assigned the PCI devices to the guest). Since there is no VGA, I can’t tell whether the guest boots or not. I also tried enabling VMware VGA and the passed-through GPU together; the display then shows the desktop, but no toolbar or Dock. My configuration is below. Would you please help me figure out what’s wrong with my configuration or setup? Thanks a lot in advance!

Host Spec:
cpu: 8700k
mb: asus prime b360-plus
mem: 32GB
hdd: 3T
PVE version: 6.1-3 (OVMF patched for deprecated clover guides)

Guest Spec:
cpu: 4 CPU cores
mem: 16GB
ssd: 250GB 860EVO (passed through)
GPU: RX580 (passed through)
USB: mouse / keyboard (devices passed through)
OC Version: V5
monitor socket used for guest: hdmi + dp

Guest configs:

args: -device isa-applesmc,osk="***(c)***" -smbios type=2 -cpu Penryn,kvm=on,vendor=GenuineIntel,+kvm_pv_unhalt,+kvm_pv_eoi,+invtsc,vmware-cpuid-freq=on,+pcid,+ssse3,+sse4.2,+popcnt,+avx,+aes,+xsave,+xsaveopt,check -device usb-kbd,bus=ehci.0,port=2
balloon: 0
bios: ovmf
boot: cdn
bootdisk: virtio2
cores: 4
cpu: Penryn
hostpci0: 01:00.0,pcie=1,x-vga=1
hostpci1: 01:00.1,pcie=1
hostpci2: 00:14,pcie=1
machine: q35
numa: 0
ostype: other
scsihw: virtio-scsi-pci
sockets: 1
vga: none

When the emulated vga is turned on it becomes the primary display, and the menu bar and dock ends up going there. You can drag the white bar in the Displays settings between screens to change this.

This is wrong:

hostpci0: 01:00.0,pcie=1,x-vga=1

hostpci1: 01:00.1,pcie=1

It needs to be a single line like so:

hostpci0: 01:00,pcie=1,x-vga=1

Sometimes the passthrough GPU won’t come to life until you unplug and replug the monitor, so give that a go.

Thanks for the reply. I modified the config as you told me, but the GPU still doesn’t work. After trying many times (including unplugging and replugging the monitor), I found that the GPU only works when the emulated VGA is turned on. Do you have any idea why this is happening?

You can try editing OpenCore’s config.plist to add agdpmod=pikera to your kernel arguments (next to the debugsyms argument). I think you can try generic Hackintosh advice for this problem because I suspect it happens on bare metal too.
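For anyone making this edit by hand, the change to the boot-args value is just appending a word if it is missing. A minimal sketch of that string handling in bash; the function name is made up for illustration, and keepsyms=1 in the example is a hypothetical existing value, not necessarily what your config.plist contains:

```shell
#!/usr/bin/env bash
# Append one boot argument to an existing boot-args string, unless that exact
# word is already present. This only handles the string; reading and writing
# config.plist is left to you.
add_boot_arg() {
    local current="$1" arg="$2" word
    local -a words
    read -ra words <<< "$current"          # split on whitespace, no globbing
    for word in "${words[@]}"; do
        if [ "$word" = "$arg" ]; then
            printf '%s\n' "$current"       # already there: return it unchanged
            return 0
        fi
    done
    if [ -z "$current" ]; then
        printf '%s\n' "$arg"
    else
        printf '%s %s\n' "$current" "$arg"
    fi
}

# Example (hypothetical current value):
#   add_boot_arg "keepsyms=1" "agdpmod=pikera"   # prints: keepsyms=1 agdpmod=pikera
```

Assuming the standard OpenCore layout, boot-args sits under NVRAM > Add > 7C436110-AB2A-4BBB-A880-FE41995C9F82 in config.plist, and on macOS /usr/libexec/PlistBuddy can print and set it; check the key path in your own file before writing anything back.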

It doesn’t have this issue on my RX 580.

Have you solved this? I have also run into this problem.

Hey there! I was following your guide and seem to have encountered a blocker.

I’ve got Proxmox 6.2 installed on an old Dell R710 with dual Xeon X5675 CPUs, which support SSE 4.2 according to Intel’s website. I’m only using 4 cores from a single CPU in my setup, as seen below.

I’m at the point where I’m booting up using the Main drive for the first time to finish up the install, and the loader gets near the end and then the VM shuts down. Looking at dmesg, I see kvm[24160]: segfault at 4 ip 00005595157fa3b7 sp 00007f31c65f2e98 error 6 in qemu-system-x86_64[5595155d9000+4a2000].

My 101.conf is below (with osk removed):

```

args: -device isa-applesmc,osk="…" -smbios type=2 -device usb-kbd,bus=ehci.0,port=2 -cpu host,kvm=on,vendor=GenuineIntel,+kvm_pv_unhalt,+kvm_pv_eoi,+hypervisor,+invtsc

balloon: 0

bios: ovmf

boot: cdn

bootdisk: ide2

cores: 4

cpu: Penryn

efidisk0: local-lvm:vm-101-disk-1,size=4M

ide0: freenas-proxmox:iso/Catalina-installer.iso,cache=unsafe,size=2095868K

ide2: freenas-proxmox:iso/OpenCore.iso,cache=unsafe

machine: q35

memory: 32768

name: mac

net0: vmxnet3=[removed],bridge=vmbr0,firewall=1

numa: 0

ostype: other

sata0: local-lvm:vm-101-disk-0,cache=unsafe,discard=on,size=64G,ssd=1

scsihw: virtio-scsi-pci

smbios1: uuid=[removed]

sockets: 1

vga: vmware

vmgenid: [removed]

```

Try the -cpu Penryn alternative mentioned in the post, and let me know what warnings it prints when you start the VM from the CLI (e.g. qm start 101) and whether it fixes the problem.
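The Penryn args line posted earlier in these comments asks for +pcid, +ssse3, +sse4.2, +popcnt, +avx, +aes, +xsave and +xsaveopt, and its trailing check makes QEMU warn about any of those the host CPU lacks. On an older Xeon it can help to see which are missing before starting the VM. A sketch that compares a list of QEMU feature names against a cpuinfo file; the flag list is an assumption copied from that args line, and the only name translation handled is QEMU's dotted spelling versus the kernel's underscored one:

```shell
#!/usr/bin/env bash
# Print each requested QEMU CPU feature that a cpuinfo file does not list.
# QEMU writes some features with a dot (sse4.2) where /proc/cpuinfo uses an
# underscore (sse4_2), so dots are translated before comparing.
missing_cpu_flags() {
    local cpuinfo="$1"; shift
    local flags feature name
    # Every core has the same "flags" line, so the first one is enough.
    flags=" $(grep -m1 '^flags' "$cpuinfo" | cut -d: -f2) "
    for feature in "$@"; do
        name="${feature//./_}"
        case "$flags" in
            *" $name "*) ;;                  # host has it
            *) printf '%s\n' "$feature" ;;   # host lacks it
        esac
    done
}

# Flags taken from the Penryn args line posted earlier in these comments:
#   missing_cpu_flags /proc/cpuinfo pcid ssse3 sse4.2 popcnt avx aes xsave xsaveopt
```

Anything it prints is a feature that check will complain about; one option is to drop those +flags from the args line.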

Hi Nick, thanks for the detailed guide.

Can we enable multiple resolution support in High Sierra? I have tried every option available in the Proxmox Display section, but none of them worked. I can see only one resolution in the High Sierra VM’s Scaled list, i.e. 1920×1080. Please suggest how I can add more resolutions to this list (Settings > Displays > Scaled).

The proxmox server version is 6.2(Dell Server R810).

Are you using the emulated video adapter? I don’t think it supports multiple resolutions. If you’re using OpenCore, you need to mount your EFI partition to edit your config.plist; that’s where the resolution is set.

Nick,

I also prefer using the macOS for my regular activities. And I now have a Catalina VM thanks to your awesome installation how-to.

A simple noob question: Since macOS is your daily-driver, how do you boot and view it?

I’ve been trying noVNC, Microsoft Remote Desktop, Apple Remote Desktop, and NoMachine connections from my Mac. They each seem a little awkward with a few difficulties.

Your thoughts appreciated.

Since I’m using a passthrough GPU, macOS outputs directly to two connected monitors.

Hey Nick, my build is refusing to boot the VM correctly if the HDMI is plugged into the passthrough GPU during PVE bootup. The VM macOS 10.15.6 will show the Apple logo without loading bar and then reboot and then show the error screen “Your computer restarted because of a problem” repeatedly. However, once PVE is at login, I can then plug in the HDMI to the passthrough GPU and then start my VM normally. What gives?

I have my vfio.conf file listing my device IDs (VGA and audio), and the drivers are in the blacklist.conf file. I only pass through the VGA function to the VM (01:00.0), as I’ve read there can be issues passing through the audio along with it. Could that be the crux?

Posted on Reddit but no replies yet.

This is not a super unusual problem to have with a passthrough GPU, I’ve seen it on a couple of systems. Which GPU model are you using?

If you open the Console app in macOS, you may find some Crash Reports on the left that describe the problem; if not, it may be logged elsewhere.

I had the same issue with an RX 570: the Apple logo would show up, but there were 2 blinking gray artifacts at the top of the screen, and after ~15 seconds a quarter of the screen had artifacts. Then my display went off, flashed green, and Proxmox restarted, but with green and pink colors instead of the normal orange and white ones.

Rebooting PVE and waiting until it had booted before plugging in the HDMI cable solved all these artifacts. I also had to set my motherboard’s primary graphics to IGFX so that it defaults to the onboard display.

Hey Nick,

Thanks for this great info! Question – I’m hoping to use a smaller host OS disk for MacOS and add a secondary drive on a different (HDD) storage – but iCloud Photo Library won’t allow cloud sync to a disk that is network attached. Is there any way to ‘add’ an NFS or Samba share into the VM but make it appear as a local external disk?

Currently I’m stuck adding an extra SSD to Proxmox just to have enough space for the Photos library while still running the core OS off SSD for speed. My Proxmox host install SSD is too small to accommodate the photos, and HDD performance on the host isn’t great either.

Thanks for any thoughts.

Hello Nick,

Care to explain a bit about how are you doing the passthrough for the ssd?

I’ve followed this guide, and while macOS boots from the SSD, it is still detected as QEMU HARDDISK. I don’t know if this affects performance, and I’m not sure if I should enable the “SSD emulation” checkbox on the disk configuration page.

https://pve.proxmox.com/wiki/Physical_disk_to_kvm

Thanks!

My SSD is NVMe/PCIe, so I’m passing it through directly using PCIe passthrough (hostpciX).

Your setup will work fine, it’s the best option if you have a SATA SSD. You can edit your config file to replace your sata1 (or whatever it’s called) with virtio1 to get increased performance (because virtio block is faster than the emulated SATA).

Checking SSD emulation lets the guest know that the drive is an SSD, which some operating systems use to enable TRIM support or to turn off their automatic defrag. virtio doesn’t offer the “ssd” option, so make sure that’s not included if you switch from sata to virtio. While you’re there, also tick Discard because it’s required for TRIM to operate.
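The sata-to-virtio switch described above is a small text change to one disk line in the VM’s config file (/etc/pve/qemu-server/VMID.conf): the key changes from sataN to virtioN, ssd=1 is dropped, and discard=on is kept or added. A sketch of that transformation, assuming the usual “key: volume,option,...” layout; the function name is illustrative and it does not edit the file for you:

```shell
#!/usr/bin/env bash
# Rewrite one "sataN: ..." line from a Proxmox VM config as "virtioN: ...".
# virtio has no ssd= option, so it is dropped; discard=on is kept or added
# because TRIM needs it.
sata_to_virtio() {
    local line="$1" key value opt has_discard=0
    local -a opts kept=()
    key="${line%%:*}"        # e.g. sata1
    value="${line#*: }"      # e.g. local-lvm:vm-100-disk-1,size=64G,ssd=1
    case "$key" in
        sata[0-9]*) ;;
        *) echo "not a sataN line: $line" >&2; return 1 ;;
    esac
    IFS=, read -ra opts <<< "$value"
    for opt in "${opts[@]}"; do
        case "$opt" in
            ssd=*) ;;                                          # unsupported on virtio
            discard=*) kept+=("discard=on"); has_discard=1 ;;  # force TRIM on
            *) kept+=("$opt") ;;
        esac
    done
    [ "$has_discard" -eq 1 ] || kept+=("discard=on")
    local IFS=,
    echo "virtio${key#sata}: ${kept[*]}"
}
```

If the bootdisk: or boot: order= line names the old sataN key (as in several configs posted in this thread), it needs the new virtioN name too.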

Hello Nick,

Your post covers many important topics for creating a VM.

But have you managed to add a webcam and microphone to a VM?

I am running Catalina with QEMU and Clover, but these devices do not show up.

Can you help me add these drivers to the VM?

Thanks,

A webcam would be connected to the guest by USB, so you’d want to add it as a USB device. Most webcams should just work without an additional macOS driver.
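To find the ID to use, run lsusb on the host and pick the webcam’s line. A sketch that turns one lsusb line into the host=VENDOR:PRODUCT form accepted by a Proxmox usbN entry; the function name is made up, and the sample line and VM ID in the comments are illustrative, not from anyone’s machine:

```shell
#!/usr/bin/env bash
# Turn one line of lsusb output into the host=VENDOR:PRODUCT value that a
# Proxmox usbN: entry accepts. Returns non-zero if no ID is found.
lsusb_to_usb_host() {
    local id
    id=$(printf '%s\n' "$1" | grep -oE 'ID [0-9a-fA-F]{4}:[0-9a-fA-F]{4}' | head -n 1 | cut -d' ' -f2)
    [ -n "$id" ] || return 1
    printf 'host=%s\n' "$id"
}

# Example with an illustrative lsusb line:
#   lsusb_to_usb_host 'Bus 001 Device 004: ID 046d:0825 Logitech, Inc. Webcam C270'
#   prints: host=046d:0825
# which could then be attached with, e.g.: qm set 100 --usb0 host=046d:0825
```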

Hi Nick,

Thanks for the reply. I am using a laptop with a webcam, but the webcam doesn’t show up in the Catalina VM. I tried adding a phone as a webcam (Motorola Moto G4 Play), and that doesn’t work either.

Maybe I am doing it the wrong way. Do you have any other suggestions for me?

Hi there. Thanks for the great tutorials, Nick!

I’m a bit stuck, and wonder if anyone has any ideas.

The Mac VM will boot half way (Apple logo and progress bar 50%) before the screen goes black, the fans on the GPU spin up and don’t turn off until I reboot the PVE, and the VM freezes. Powering off and back on via the web GUI doesn’t seem to bring it back. I need to shut down the host and start over again to quiet those GPU fans.

Proxmox 6.2-15

GPU AMD Sapphire Radeon RX 580 Pulse 8GB (11265-05-20G)

I’m having the experience that others have noted where I have to wait to plug in the monitor attached to the GPU until after the PVE has booted, because it looks like otherwise my host claims it at boot despite the blacklist.

The following are all set exactly per the guide:

/etc/default/grub

/etc/modules

/etc/modprobe.d/blacklist.conf

/etc/modprobe.d/kvm.conf

/etc/modprobe.d/kvm-intel.conf

/etc/modprobe.d/vfio-pci.conf

I have edited this file as follows:

options vfio-pci ids=1002:67df,1002:aaf0

**I did not set disable_vga=1, as I have other SeaBIOS guests.

Here’s my [redacted] VM configuration file

args: -device isa-applesmc,osk="[redacted]" -smbios type=2 -device usb-kbd,bus=ehci.0,port=2 -cpu host,kvm=on,vendor=GenuineIntel,+kvm_pv_unhalt,+kvm_pv_eoi,+hypervisor,+invtsc

balloon: 0

bios: ovmf

boot: order=sata0;net0

cores: 16

cpu: Penryn

efidisk0: local-lvm:vm-102-disk-1,size=4M

hostpci0: 01:00,pcie=1,x-vga=1,romfile=Sapphire.RX580.8192.180719.rom

machine: q35

memory: 24576

name: proxmoxi7-catalina

net0: vmxnet3=[redacted],bridge=vmbr0,firewall=1

numa: 0

ostype: other

sata0: local-lvm:vm-102-disk-0,cache=unsafe,discard=on,size=300G,ssd=1

scsihw: virtio-scsi-pci

smbios1: uuid=[redacted]

sockets: 1

vga: none

vmgenid: [redacted]

Appreciate anyone’s help!

Thanks

Do you have another GPU in the system which is set as primary? If not, even Proxmox using it for its text console may be enough to raise the ire of the AMD Reset Bug. You can avoid that by adding video=efifb:off to your kernel commandline.

You can check /var/log/kern.log to see what went wrong at the hangup. If it’s like my RX 580 there will be messages there about waiting for it to reset, then complaints about deadlocked kernel threads.

If you have been doing warm reboots, try full hard host poweroffs to guarantee a complete card state reset. I once wasted an entire afternoon debugging a passthrough problem that was solved by this.
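Adding video=efifb:off means editing GRUB_CMDLINE_LINUX_DEFAULT in /etc/default/grub and then running update-grub. A minimal sketch of that edit, which appends the parameter before the closing quote only if it isn’t already there. It assumes a single-line, double-quoted GRUB_CMDLINE_LINUX_DEFAULT and GNU sed (as on Proxmox/Debian), and the function name is illustrative; trying it on a copy first is wise:

```shell
#!/usr/bin/env bash
# Add a parameter to GRUB_CMDLINE_LINUX_DEFAULT in a grub defaults file,
# unless the exact parameter is already present.
add_grub_param() {
    local file="$1" param="$2"
    if grep -qE "^GRUB_CMDLINE_LINUX_DEFAULT=\"(.* )?${param}( .*)?\"" "$file"; then
        return 0    # already present, nothing to do
    fi
    # Insert the parameter just before the closing quote (GNU sed in-place edit).
    sed -i -E "s|^(GRUB_CMDLINE_LINUX_DEFAULT=\"[^\"]*)\"|\1 ${param}\"|" "$file"
}

# Usage on the host, then regenerate the GRUB config and reboot:
#   add_grub_param /etc/default/grub video=efifb:off && update-grub
```

This only applies to hosts booted by GRUB. On Proxmox installs that boot with systemd-boot (such as a ZFS root on EFI), the command line lives in /etc/kernel/cmdline instead and is applied with proxmox-boot-tool refresh (pve-efiboot-tool refresh on older releases).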

Thanks for the response!

I have one Radeon GPU and the onboard Intel GPU.

I set my BIOS to allow dual monitors so that I could pass through the Intel GPU to an Ubuntu server that’s hosting Docker containers (otherwise my motherboard disables it when the GPU is present). The Radeon is the only other GPU in the system. My BIOS has the PEG set as primary.

I’ll try adding to the kernel command line – thank you.

To confirm: does video=efifb:off go at the end of the line in /etc/default/grub, so that the final full text is:

GRUB_CMDLINE_LINUX_DEFAULT="quiet intel_iommu=on rootdelay=10 video=efifb:off"

There is so much action in my log file from all my prior mistakes that I can’t even begin to decipher it, as it overprints my screen!

Yeah that’s the correct final text. Note that Proxmox won’t print anything to the screen at all after boot begins so make sure you have a way to administrate it remotely.

You may have to disable that multi display option in your host settings to prevent the host initialising the card, but I reckon you’ll get away without it.

Thanks! I’ve got kids using the server so need them to finish up a movie and will take it for a spin!

My server usually runs headless, so no problem on ssh’ing in to manage. Appreciate the heads up.

Hopefully I won’t have to disable the multi display as I’ll lose the quick sync hardware transcoding on my Plex.

Thanks again. I’ll report back my findings.

No joy. Same crash half way through the boot. I’ll try to disable the dual monitor in the bios and see what happens.

Is this what I’m looking for in the logs?

Nov 7 18:01:23 proxmoxi7 kernel: [ 162.034363] vfio-pci 0000:01:00.0: enabling device (0002 -> 0003)

Nov 7 18:01:23 proxmoxi7 kernel: [ 162.034584] vfio-pci 0000:01:00.0: vfio_ecap_init: hiding ecap 0x19@0x270

Nov 7 18:01:23 proxmoxi7 kernel: [ 162.034589] vfio-pci 0000:01:00.0: vfio_ecap_init: hiding ecap 0x1b@0x2d0

Nov 7 18:01:23 proxmoxi7 kernel: [ 162.034592] vfio-pci 0000:01:00.0: vfio_ecap_init: hiding ecap 0x1e@0x370

I’m really trying to avoid changing the BIOS. Just an update so I can track what I’ve tried.

Here are the kernel commands I’ve tried, each with the same result (crash half way into booting, with GPU fans spinning up):

GRUB_CMDLINE_LINUX_DEFAULT="quiet intel_iommu=on"

GRUB_CMDLINE_LINUX_DEFAULT="quiet intel_iommu=on rootdelay=10"

GRUB_CMDLINE_LINUX_DEFAULT="quiet intel_iommu=on video=efifb:off"

GRUB_CMDLINE_LINUX_DEFAULT="quiet intel_iommu=on rootdelay=10 video=efifb:off"

GRUB_CMDLINE_LINUX_DEFAULT="quiet intel_iommu=on pcie_acs_override=downstream,multifunction video=efifb:eek:ff"

I seem to definitely need the romfile in my VM configuration; otherwise I don’t even get to the half-booted screen on the Radeon card, and the monitor never comes online.

hostpci0: 01:00,pcie=1,x-vga=1,romfile=Sapphire.RX580.8192.180719.rom

I downloaded the rom from the link in the post, it’s not one I’ve dumped myself.

I think BIOS is the next option, and am planning on two options:

1. change the primary from the GPU to the onboard

2. If that doesn’t work, disable the dual monitor and see what happens

>video=efifb:eek:ff

This one is actually “efifb:off” that has been garbled by a forum that turns “:o” into an “:eek:” emoji when copied and pasted as text (thanks to MikeNizzle82 for solving this mystery: https://www.reddit.com/r/VFIO/comments/jlxqzs/kernel_parameter_what_does_videoefifbeekff_mean/ ). It’s funny how far this nonsense text has spread on the internet.

Double check that your cmdline edits are really being seen by running “cat /proc/cmdline” to see the booted commandline.

You absolutely want to set the primary to the onboard, that’ll be why Proxmox was always grabbing the GPU despite blacklisting the driver.
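The cat /proc/cmdline check mentioned above can be scripted. This sketch looks for an exact parameter in a command-line file and also flags the forum-mangled :eek: spelling described above. The function name is illustrative, and /proc/cmdline is the intended input:

```shell
#!/usr/bin/env bash
# Report whether a parameter appears in a booted kernel command line file
# (normally /proc/cmdline), and flag the forum-mangled ":eek:" spelling.
check_cmdline() {
    local file="$1" param="$2" word
    local -a words
    read -ra words < "$file"
    for word in "${words[@]}"; do
        if [ "$word" = "$param" ]; then
            echo "ok: $param is active"
            return 0
        fi
    done
    case " ${words[*]} " in
        *:eek:*) echo "warning: found ':eek:', probably a garbled ':o'" ;;
    esac
    echo "missing: $param"
    return 1
}

# Usage on the host: check_cmdline /proc/cmdline video=efifb:off
```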

Those ones are okay, I was expecting to see errors like:

kernel: [259028.473404] vfio-pci 0000:03:00.0: vfio_ecap_init: hiding ecap 0x1b@0x2d0

kernel: [261887.671038] pcieport 0000:00:02.0: AER: Uncorrected (Fatal) error received: 0000:00:02.0

kernel: [261887.671808] pcieport 0000:00:02.0: AER: PCIe Bus Error: severity=Uncorrected (Fatal), type=Transaction Layer, (Receiver ID)

kernel: [261887.672445] pcieport 0000:00:02.0: AER: device [8086:3c04] error status/mask=00000020/00000000

kernel: [261887.673063] pcieport 0000:00:02.0: AER: [ 5] SDES (First)

kernel: [261981.618083] vfio-pci 0000:03:00.0: not ready 65535ms after FLR; giving up

I get this halfway through guest boot, at the point where macOS tries to init the graphics card. (00:02.0 is a PCIe root port and 03:00 is the RX 580)
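When kern.log is too noisy to read (as it was earlier in this thread), grepping for the two failure signatures quoted above narrows it down. This sketch only matches the fatal AER line and the “not ready … after FLR” line as worded in that excerpt; other kernel versions may phrase them differently, and the function name is illustrative:

```shell
#!/usr/bin/env bash
# Print only the lines of a kernel log that match the two failure signatures
# quoted above: a fatal AER error on a PCIe port, and a device that never came
# back after a function-level reset (FLR).
scan_reset_errors() {
    grep -E 'AER: Uncorrected \(Fatal\)|not ready [0-9]+ms after FLR' "$1"
}

# Usage on the host: scan_reset_errors /var/log/kern.log
```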

That’s pretty funny about the emoji – no wonder it didn’t work!

Unfortunately, neither did changing the bios (first to make the onboard the primary, and then going back to Radeon but with dual monitor disabled).

I crash as the same place every time.

The edits do appear to be loading.

Wonder if it’s my ROM file. I may need to look into dumping my own. It’s interesting though, as others don’t seem to need to do that with this card.

In theory if the GPU is never initialised, the vBIOS is unmolested and so you don’t need to supply a ROM file. This is the situation I have on my machine, and so I’ve never manually supplied a ROM file. But this relies on the host BIOS never touching the card, which sounds like it’s impossible with your BIOS due to the iGPU disabling thing you mentioned.

(My motherboard has an onboard VGA controller, which is fantastic for a text Proxmox console whilst keeping grubby hands off of my discrete GPUs)

OK, not sure which combination mattered, but it’s working! Here are my final settings:

.conf file (**removed the romfile):

hostpci0: 01:00,pcie=1,x-vga=on

grub (**back to the original):

GRUB_CMDLINE_LINUX_DEFAULT="quiet intel_iommu=on rootdelay=10"

vfio-pci (**added the disable_vga=1):

options vfio-pci ids=1002:67df,1002:aaf0 disable_vga=1

BIOS set primary to integrated graphics (didn’t work before, but does now!).

And HW transcode working from the onboard Intel GPU in the Ubuntu server (had to remove and re-add the PCI in the PVE web GUI).

Thanks so much!!
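The vfio-pci options line above can be assembled from lspci -nn output rather than typed by hand. A sketch, assuming lspci -nn prints each function’s [vendor:device] pair in square brackets (as in the IOMMU listing later in this thread) and that disable_vga=1 is wanted, as in these final settings; remove it if you have SeaBIOS guests, per the earlier concern. The function name is illustrative:

```shell
#!/usr/bin/env bash
# Build a vfio-pci "options" line from "lspci -nn" output read on stdin,
# collecting the [vendor:device] IDs of every function listed.
vfio_options_from_lspci() {
    local ids
    # [0300]-style class codes have no colon, so only vendor:device pairs match.
    ids=$(grep -oE '\[[0-9a-f]{4}:[0-9a-f]{4}\]' | tr -d '[]' | sort -u | paste -sd, -)
    [ -n "$ids" ] || return 1
    printf 'options vfio-pci ids=%s disable_vga=1\n' "$ids"
}

# Usage on the host (01:00 selects every function of the card in that slot):
#   lspci -nn -s 01:00 | vfio_options_from_lspci
# Review the output, put it in /etc/modprobe.d/vfio-pci.conf, then run update-initramfs -u.
```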

Sweet as!

Hi Nick,

Thanks for the guides here. I was able to passthrough a GT 710 card on my Dell R710 (dual L5640s). I also have keyboard and mouse working, simply by adding the hardware in Proxmox webgui. Woohoo!

I am having a bit of a struggle getting my dual NIC PCIe card to show up in the VM. I’ve added the hardware, again — through the gui. I’ve also found a relevant kext for the card (I mounted the EFI partition and dropped it into the kext folder), but nothing seems to show up once booted. What can I do to check 1) if Proxmox is properly passing the PCI device to the VM and 2) if the VM is seeing the device. Maybe I’ve injected the kext incorrectly?

Proxmox shows the PCIe NIC at 06:00, which you’ll see in the VM config:

06:00.0 Ethernet controller: Intel Corporation 82576 Gigabit Network Connection (rev 01)

06:00.1 Ethernet controller: Intel Corporation 82576 Gigabit Network Connection (rev 01)

Here’s my config:

args: -device isa-applesmc,osk="…" -smbios type=2 -device usb-kbd,bus=ehci.0$

balloon: 0

bios: ovmf

boot: cdn

bootdisk: virtio0

cores: 8

cpu: Penryn

efidisk0: local-zfs:vm-143-disk-3,size=1M

hostpci0: 07:00,pcie=1,x-vga=1

hostpci1: 06:00,pcie=1

machine: q35

memory: 12288

name: MAC1

net0: vmxnet3=…,bridge=vmbr0,firewall=1

numa: 1

ostype: other

scsihw: virtio-scsi-pci

smbios1: uuid=…

sockets: 1

usb0: host=1-1.1

usb1: host=1-1.2

vga: vmware

virtio0: local-zfs:vm-143-disk-2,cache=unsafe,discard=on,size=64G

vmgenid: …

…now that I paste this here, I see a bunch of args that you suggest are missing. I might tweak those too while I’m at it, but the machine does run with this config (minus the passthrough NIC)!

It’s unlikely the passthrough config itself is the issue, because most errors here cause the VM to fail to launch. But you can launch the VM on the commandline like “qm start 143” to see any warnings (check dmesg output too after the launch).

Which kext did you load? In theory you shouldn’t have to do this since OpenCore bypasses SIP, but you might need to disable SIP to allow an unsigned kext to be loaded: From the boot menu hit the Recovery option, then from the recovery environment open up Terminal from the top menu and enter “csrutil disable”, then reboot.
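For question 1 (is Proxmox actually handing the NIC to the VM), one host-side check is lspci -k, which prints a “Kernel driver in use” line per device. With the VM running, a passed-through device should normally report vfio-pci; if it still reports igb (the usual Linux driver for the 82576), the host still owns it. A sketch that extracts that driver name, with an illustrative function name:

```shell
#!/usr/bin/env bash
# Read "lspci -k" output on stdin and print the "Kernel driver in use" value.
driver_in_use() {
    sed -n 's/^[[:space:]]*Kernel driver in use:[[:space:]]*//p' | head -n 1
}

# Usage, with the VM running (06:00.0 is the first NIC port from this comment):
#   lspci -k -s 06:00.0 | driver_in_use
```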

Oh dear. I managed to break Proxmox by trying to add hugepages and then removing them when it didn’t work.

I made a backup of /etc/kernel/cmdline and then added the “default_hugepagesz=1G hugepagesz=1G hugepages=64”

ran update-initramfs -u and rebooted

after my VM didn’t start up I tried to undo by reverting to the original “cmdline” and ran update-initramfs -u

Then I got this:

https://drive.google.com/file/d/16iHp77aLBI7uWwhiJuyFlz-rKvhmgiWq/view?usp=sharing

And rebooting proxmox shows an error and returns back to BIOS screen. I shouldn’t have done this, obviously.

How can I fix this mess?

You can boot from the Proxmox installer using the “rescue boot” option to fix things up.

The error looks like the boot partition is not mounted, you may need to mount that manually.

It actually turned out that the culprit was one of my RAM modules. After removing the bad module pair things went back to normal.

I tried out hugepages and didn’t notice any improvement with RAM-intensive applications like After Effects, which can easily use 50+ GB when working with 4K footage. There is some lag and stutter after the video has loaded into RAM, until it plays back smoothly. I was hoping hugepages would improve on that.

Could you explain what the benefit of using hugepages is?
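Some arithmetic behind those parameters: hugepagesz=1G hugepages=64 reserves 64 × 1 GiB = 64 GiB at boot, and that memory is then unavailable to the host and to any VM not set up to use hugepages. The usual benefit is fewer pages for the CPU to track: 64 GiB in 4 KiB pages is 16,777,216 pages, versus 64 pages at 1 GiB, which reduces TLB misses for memory-heavy guests. In Proxmox a VM only uses them if its config also has a hugepages line (hugepages: 1024 for 1 GiB pages). A sketch that compares a reservation with MemTotal; the installed RAM in this comment isn’t stated, so the numbers are yours to supply, and the function name is illustrative:

```shell
#!/usr/bin/env bash
# Compare a boot-time hugepage reservation with the host's total RAM.
# Args: meminfo file (normally /proc/meminfo), number of pages, page size in MiB
# (1024 for hugepagesz=1G, 2 for 2M pages).
hugepage_reservation() {
    local meminfo="$1" count="$2" size_mib="$3"
    local reserved_mib=$(( count * size_mib ))
    local total_kib total_mib
    total_kib=$(awk '/^MemTotal:/ {print $2}' "$meminfo")   # value is in kB (KiB)
    total_mib=$(( total_kib / 1024 ))
    echo "reserved ${reserved_mib} MiB of ${total_mib} MiB"
    # Succeed only if something is left over for the host itself.
    [ "$reserved_mib" -lt "$total_mib" ]
}

# Usage: hugepage_reservation /proc/meminfo 64 1024
```

Leaving only a sliver for the host still counts as “success” here, so treat a pass as a minimum check rather than a recommendation.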

Hi Nick,

great work.

I’m trying to achieve much the same goal, but I’m running into the following problem.

I’m using a Mac Pro 5,1, running Big Sur on a Fusion Drive through Proxmox 6.3-2. I got it all running with GPU passthrough (GeForce GTX 770 with a Mac BIOS). But when I set vga: to none, the VM refuses to boot and sits at 100% on one vCore forever.

To get it booting again I have to add my stock Mac Pro GPU (GeForce 9500 GT). Then I get a boot screen on the GT, and later it switches to the GTX 770 and runs well.

Any ideas what the problem is?

Greetings hackpapst

Thanks for the awesome guide. It empowered me to build a Unified Workstation (Windows, macOS, Linux) during the 2020 year-end holiday.

The Unified Workstation has 3 “hybrid” VMs. Each VM has a GPU passthrough and a USB controller passthrough, and all 3 hybrid VMs can be controlled by the same keyboard and mouse.

You can see what you inspired on my newly set-up website.

Be safe.

I’m trying to apply the method you used in this post to fake the ID of your network card in my VM .conf file, but to fake the ID of the graphics card instead.

It’s being a pain in the ass.

Hi Nick, great content and info! I’ve been keeping an eye on this for a while but haven’t taken the plunge since my edge case requirements haven’t been met with current technologies and caveats, but it is getting fairly close now!

I’m looking to do a similar setup; however, I’m hoping recent paravirtualisation developments in vGPU partitioning (https://www.youtube.com/watch?v=cPrOoeMxzu0 - Craft Computing) will remove the need for dual discrete GPUs for the Mac and Windows VMs (for thermal and server PC size reasons).

Would it be possible to ‘live-switch’ between say 2 or more (Mac+Win) VM’s if both are running simultaneously; perhaps instead of passing through mouse and keyboard directly to a guest, it was passed via a software kvm like barrier or sharemouse? I’m guessing there are still heavy caveats when trying for an all-in-one solution on Proxmox (which is my dream goal). I’m unsure if a headless Proxmox server with ‘passthrough hooks’ or a dedicated Linux host with QEMU/KVM derived guest VM’s is the way to go.

My use case would be to isolate windows for pure gaming only (w/ vulkan, open gl support), Mac for multimedia work & web browsing, music (I know nVidia 10 series cards work great if using High Sierra only – enough for me – https://www.youtube.com/watch?v=xo4KGZMswYs) combined with a storage service (i.e ZFS) that delivers a management scenario for backing up and cloning VM’s.

What was your reason for going the headless route if you’re not clustering this build? Was it mainly to manage your macOS VM, cloning and testing, etc.? Do you game casually or use a Windows VM with GPU passthrough? I’m guessing you might, since you have 2 GPUs, but you never mention anything Windows-related 😉

Thanks again and keep up the great work.

Yes, Barrier works nicely for this usecase, I’ve passed through my keyboard and mouse to macOS, and then shared those over to Windows using Barrier before. Potentially the input devices could be passed through with evdev passthrough (which allows easy bind/unbind), but I’m not sure if that requires a desktop environment to be running on the host to provide those events.

High Sierra is out of support and no longer receives security updates, and macOS software vendors are pretty quick to drop support for these old versions. I don’t think this is a sustainable option.

Going virtual for macOS meant that I didn’t need to set up Clover/OpenCore for my host hardware, since the VM only needs to see the emulated hardware (except the CPU and GPU). This guaranteed that I could get macOS working smoothly. It also meant I could easily roll back the VM when operating system updates broke the Hackintosh (although now since I PCIe passthrough an NVMe disk to macOS, I don’t have snapshot capability for that one any more). And I could run ZFS on my host to manage all my files for me and use them from any of my VMs (macOS didn’t have any ZFS support at all at that time).

I game on Windows with GPU passthrough, I have two GPUs.

Howdy! Found your guide via the ole’ Google and used it to set up a Proxmox VM. I want to pass through an RX 580 now, but it dies at “[PCI configuration begin].” Any chance you could post your OpenCore config.plist? Thanks so much!

I didn’t edit it, it’s the same as my published OpenCore image plus a line for marking my network as built-in.

v13 is the one you are using?

Thanks!

I was using v13 but I’ve just switched to and released a new v14, which works too.

I have been beating my face against this for several weeks now. I went back and used Arch and got everything working, then I decided to try Proxmox 6.4 instead of 7. It worked the first time. So anyway, if you figure out 7 I’ll be super curious. Thanks so much again!

I’m using the RX 580 on Proxmox 7 and it works fine, so there’s no fundamental problem with 7 and the RX 580. More likely it’s a passthrough configuration problem, like the host initialising the RX 580 during its own boot (the RX 580 has the AMD Reset Bug).

Well, all of the info I needed was on this page, if I had just spent the time to read it instead of using the ole’ Google. The vendor-reset module made the situation worse, but disabling the framebuffer with video=efifb:off got me right as rain. I grabbed a headless HDMI 1080p dongle off of Amazon. Thanks so much for your help and responsiveness, Nick! Where is your tip jar?

Good to hear! You can send tips to my PayPal at n.sherlock@gmail.com

Has anyone played with VmAssetCacheEnable? Love to get the Content Caching working. https://github.com/ofawx/VmAssetCacheEnable

NM. I’m illiterate. https://github.com/ofawx/VmAssetCacheEnable/issues/1 😀 Cheers all!

Hi Bob, that issue has been resolved! Should work fine now.

Hello, thanks for all the valuable info. Reading this page, I see you are using Lightroom. After installing Big Sur on Proxmox and migrating my Hackintosh user, I’m unable to load any of my Lightroom catalogs. Have you experienced something similar?

Do you have a passthrough GPU attached? I bet Lightroom will want a GPU for acceleration.

What errors are you getting?

Any info on how to pass through the HD Audio controller? I have an onboard one, but it isn’t detected in Big Sur.

After passing it through using PCIe passthrough, see this guide:

https://dortania.github.io/OpenCore-Post-Install/universal/audio.html

Hello Nick, Thank you for your detailed guides and the time you spend writing them. It is highly appreciated. 🙏🏼

I am new to this and was wondering what file system you are using for the root drive and why? From what I read, not everyone is using ZFS.

Thank you very much in advance. 🙏🏼

I’m using ZFS on all my drives including the root disk because its snapshot and integrity checking tools are second to none.

On Proxmox specifically the big win of having a ZFS root disk (compared to LVM) is that you don’t need to statically allocate space for the root filesystem, it shares its space freely with the rest of the disk and will grow or shrink as needed. This makes it the clear front-runner for single-volume setups in my opinion.

Proxmox has solved the awkward issue seen on other distros where the root filesystem can only use a limited subset of ZFS features (due to GRUB’s limited ZFS implementation) by using systemd-boot with EFI instead. This uses a full Linux kernel as the bootloader so it has no restrictions on what filesystems it can mount.

Thank you very much for your quick reply Nick.

I followed your guide and advice and virtualized macOS on a 4x 1TB SSD RAIDZ1 configuration. The read and write speeds I was getting within the VM were all over the place, 450 – 8000 MB/s. Maybe it’s just me, but the system felt less responsive than a bare-metal SSD install. Is there a way to tune ZFS for ‘more responsiveness’?

NVMe drives come to mind, but from what I read, ZFS would wear those out very quickly. Have you come across anyone who used an NVMe LVM RAID combination to run VMs on Proxmox?

Many thanks in advance 🙏🏼.

The peak speeds you’re seeing there are just writes to the host’s memory cache. If you want a more consistent throughput then you can disable host-caching entirely in your VM disk options, which’ll put that responsibility into the guest instead (this’ll cap that 8000MB/s fictional speed you saw on the top end).

ZFS doesn’t really do anything special that would ruin NVMes, make sure you’re not looking at old advice from before ZFS supported TRIM.

On my system I pass an entire NVMe SSD directly through to macOS using PCIe passthrough, so macOS has full raw access to it and gets the maximum possible performance. (I do lose the ability to snapshot and roll back the guest, however.) I helped someone set up a macOS VM that had 4 NVMes in a Thunderbolt enclosure; they passed all 4 through via PCIe and RAID0’d them within macOS (not possible with the boot disk, though), and the performance was eye-watering.
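Switching a disk from cache=unsafe (as in several configs in this thread) to no host caching is a one-option change on the disk line. A sketch of that edit, assuming “No cache” (cache=none) is the setting meant by disabling host caching, and the usual “key: volume,options” layout with the volume ID first; the function name is illustrative:

```shell
#!/usr/bin/env bash
# Set the cache= mode on one disk line of a Proxmox VM config, replacing any
# existing cache= option. The volume ID stays first; the mode follows it.
set_disk_cache() {
    local line="$1" mode="$2" key value opt
    local -a opts kept=()
    key="${line%%: *}"
    value="${line#*: }"
    IFS=, read -ra opts <<< "$value"
    for opt in "${opts[@]}"; do
        case "$opt" in
            cache=*) ;;             # drop the old mode
            *) kept+=("$opt") ;;
        esac
    done
    kept=("${kept[0]}" "cache=$mode" "${kept[@]:1}")
    local IFS=,
    echo "$key: ${kept[*]}"
}

# Usage: set_disk_cache "sata0: local-lvm:vm-102-disk-0,cache=unsafe,discard=on,size=300G,ssd=1" none
```

The same change can also be made from the disk’s Edit dialog in the Proxmox web UI.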

For anyone using ZFS with large amounts of memory allocated to any of their VMs, I wrote a simple hookscript to clear the read-cache as Nick mentioned with:

echo 3 > /proc/sys/vm/drop_caches

Here’s the script:

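#!/usr/bin/env bash

# /Storage/VM/snippets/drop_caches.sh
# Proxmox calls hookscripts with two arguments: the VM ID and the phase
# (pre-start, post-start, pre-stop or post-stop).

VM_ID="$1"

EXECUTION_PHASE="$2"

if [[ "$EXECUTION_PHASE" == "pre-start" ]]; then

# Shell builtin echo: /usr/bin/echo does not exist on every Debian install.

echo 3 > /proc/sys/vm/drop_caches

echo "dropped caches before starting VM $VM_ID at $(date +%T) on $(date +%D)" > /var/log/drop_caches_hook.log

fi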

Just copy and paste it into a document, save it as drop_caches.sh, then add it to /var/lib/vz/snippets or wherever Proxmox knows you keep snippets. You can add a snippet location in the web UI by going to Datacenter > Storage and adding new storage, or editing existing storage to allow snippets. Then just run chmod +x drop_caches.sh in your snippet location.

You can then assign it to a VM by doing:

qm set YOURVMID --hookscript NAMEOFSTORAGE:snippets/drop_caches.sh

for example:

qm set 100 --hookscript local:snippets/drop_caches.sh

Hope this helps someone out there. Nick, feel free to keep or modify this and add it to your guide if you think it’s useful.

After I did the VBIOS dumping step, nothing works anymore. The display connected to the host doesn’t receive anything, I can’t connect to Proxmox on the host, Linux won’t boot, and I have no access to the BIOS. Yet all the fans are running and the motherboard is blinking. I tried different GPU orders in PCIe slots 1 and 2, and tried 2 GPUs; nothing. Any hints on how to rescue this unfortunate situation?

Thanks!

Sorry, never mind: I reset the CMOS on the motherboard, and now I can boot again.

Hi,

I’m running into an odd problem and was wondering if anyone has an idea why this may be.

The Monterey VM works OK without GPU passthrough. I have a Sapphire Radeon Pulse RX 560, which has Metal support and works with Monterey in a native OpenCore configuration.

I followed the setup for the passthrough: I blacklisted the drivers, followed the vendor-reset procedure, and created the vfio.conf:

echo "options vfio-pci ids=1002:67ff,1002:aae0 disable_vga=1" > /etc/modprobe.d/vfio.conf

When I add the PCI passthrough from the Proxmox web interface and select the GPU ID from the list, the VM starts and the display repeatedly shows the following:

[60.0234623] usb usb2-port1: Cannot enable. Maybe the USB cable is bad?

[64.2467447] usb usb2-port1: Cannot enable. Maybe the USB cable is bad?

and keeps on repeating.

Removing the GPU passthrough the VM works fine.

But I am not passing through USB, just the GPU. Why does this USB error come up? This is a Linux kernel error, so I guess it doesn’t even reach the VM’s bootloader.

Verify your IOMMU groups to check what devices are in the same group as the GPU: https://wiki.archlinux.org/title/PCI_passthrough_via_OVMF#Ensuring_that_the_groups_are_valid
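A version of that group listing as a bash function, in the spirit of the Arch wiki script linked above. It’s a sketch: the sysfs root is a parameter (defaulting to /sys/kernel/iommu_groups) so it can be tried against a copy, lspci descriptions are only added when you pass “describe”, and the function name is illustrative. What you’re looking for is whether anything else shares the GPU’s group.

```shell
#!/usr/bin/env bash
# List PCI device addresses by IOMMU group, groups in numeric order.
# Args: sysfs root (default /sys/kernel/iommu_groups), optional "describe"
# to print lspci -nn descriptions instead of bare addresses.
list_iommu_groups() {
    local root="${1:-/sys/kernel/iommu_groups}" describe="${2:-}" group dev addr
    find "$root" -mindepth 1 -maxdepth 1 -type d | sort -V | while read -r group; do
        echo "IOMMU Group ${group##*/}:"
        for dev in "$group"/devices/*; do
            [ -e "$dev" ] || continue          # glob matched nothing
            addr="${dev##*/}"
            if [ "$describe" = "describe" ]; then
                echo "  $(lspci -nns "$addr")"
            else
                echo "  $addr"
            fi
        done
    done
}

# Usage on the host: list_iommu_groups /sys/kernel/iommu_groups describe
```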

Hi Nick,

many thanks for the detailed guide and for the reply.

Seems that the GPU is alone in group 8.

root@pve:~# ./iommu_groups.sh

IOMMU Group 0:

00:00.0 Host bridge [0600]: Intel Corporation Device [8086:9b43] (rev 05)

IOMMU Group 1:

00:01.0 PCI bridge [0604]: Intel Corporation 6th-10th Gen Core Processor PCIe Controller (x16) [8086:1901] (rev 05)

IOMMU Group 2:

00:12.0 Signal processing controller [1180]: Intel Corporation Comet Lake PCH Thermal Controller [8086:06f9]

IOMMU Group 3:

00:14.0 USB controller [0c03]: Intel Corporation Comet Lake USB 3.1 xHCI Host Controller [8086:06ed]

00:14.2 RAM memory [0500]: Intel Corporation Comet Lake PCH Shared SRAM [8086:06ef]

IOMMU Group 4:

00:16.0 Communication controller [0780]: Intel Corporation Comet Lake HECI Controller [8086:06e0]

IOMMU Group 5:

00:17.0 SATA controller [0106]: Intel Corporation Device [8086:06d2]

IOMMU Group 6:

00:1b.0 PCI bridge [0604]: Intel Corporation Device [8086:06c2] (rev f0)

IOMMU Group 7:

00:1f.0 ISA bridge [0601]: Intel Corporation Device [8086:0685]

00:1f.4 SMBus [0c05]: Intel Corporation Comet Lake PCH SMBus Controller [8086:06a3]

00:1f.5 Serial bus controller [0c80]: Intel Corporation Comet Lake PCH SPI Controller [8086:06a4]

00:1f.6 Ethernet controller [0200]: Intel Corporation Ethernet Connection (11) I219-V [8086:0d4d]

IOMMU Group 8:

01:00.0 VGA compatible controller [0300]: Advanced Micro Devices, Inc. [AMD/ATI] Baffin [Radeon RX 550 640SP / RX 560/560X] [1002:67ff] (rev cf)

IOMMU Group 9:

01:00.1 Audio device [0403]: Advanced Micro Devices, Inc. [AMD/ATI] Baffin HDMI/DP Audio [Radeon RX 550 640SP / RX 560/560X] [1002:aae0]

IOMMU Group 10:

02:00.0 Multimedia audio controller [0401]: Xilinx Corporation RME Hammerfall DSP [10ee:3fc5] (rev 3c)

I finally got it working.

From what I understand, Proxmox was still using the GPU and not making it available to the VM.

I enabled the onboard Intel GPU and set it as the primary GPU in the BIOS, then booted Proxmox.

Started the VM and it worked.

The VM sees an extra monitor, though, and I don’t know what it is. Is it the Intel GPU? (I doubt it, because Monterey needs a GPU with Metal support.)

Nah, it will be the emulated VGA. Set Display to “none” in your VM’s hardware settings (vga: none in the VM config).
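In the web UI this is the Display entry on the VM’s Hardware tab. From the shell, `qm set 100 --vga none` should do the same thing (VM ID 100 is an assumption borrowed from the `qm start 100` example earlier). A minimal check, assuming the setting ends up in the VM’s config file as the line `vga: none`; the function name is hypothetical:

```shell
#!/usr/bin/env bash
# Succeed if a Proxmox VM config has the emulated display disabled.
# Assumptions: VM ID 100, and that `qm set 100 --vga none` stores the
# setting as the exact line "vga: none".
has_vga_none() {
  local conf="${1:-/etc/pve/qemu-server/100.conf}"
  grep -qx 'vga: none' "$conf"
}
```

`has_vga_none && echo "emulated VGA disabled"` on the host should print the message once the change is saved.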

I’m having an odd issue with audio, and I am not sure if there is a cure for it.

When I pass through the PCIe RME audio interface, the RME drivers on Monterey find it and it looks OK.

The problem is that it produces audio for 2 seconds and then stops. No matter what I do, it will not produce any more audio; I have to completely restart the machine to get those 2 seconds of audio again.

To rule out an issue with Monterey and the RME drivers, I installed Monterey directly on a hard drive with OpenCore and tried it there. It works fine, so the issue only occurs when passing the card through Proxmox to the VM.

I found this post https://forum.proxmox.com/threads/odd-passthrough-behavior-audio.100358/

Any ideas on how to go about this would be greatly appreciated!

I would check host dmesg output for complaints about vfio and interrupts, there might be a clue there.
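A minimal filter for that, assuming a plain `dmesg | grep` is all you need. The patterns cover vfio messages plus the two IRQ messages quoted in the reply below; they are not an exhaustive list, and the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Keep only kernel log lines that mention vfio or interrupt trouble.
# Reads the log on stdin, case-insensitively.
vfio_irq_messages() {
  grep -iE 'vfio|nobody cared|disabling irq'
}
```

Usage on the host: `dmesg | vfio_irq_messages`, ideally right after reproducing the problem in the guest.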

Indeed, Nick.

A couple of seconds after I play a test sound, this appears on the main PVE monitor:

irq18: nobody cared (try booting with the irqpoll option)

and in the shell

root@pve:~#

Message from syslogd@pve at Apr 2 13:55:23 …

kernel:[ 125.843550] Disabling IRQ #18

I ran:

root@pve:~# lspci -nnks '02:00.0'

02:00.0 Multimedia audio controller [0401]: Xilinx Corporation RME Hammerfall DSP [10ee:3fc5] (rev 3c)

Subsystem: Xilinx Corporation RME Hammerfall DSP [10ee:0000]

Kernel driver in use: vfio-pci

Kernel modules: snd_hdsp

root@pve:~# lsmod | grep snd_hdsp

snd_hdsp 69632 0

snd_rawmidi 36864 2 snd_usbmidi_lib,snd_hdsp

snd_hwdep 16384 3 snd_usb_audio,snd_hda_codec,snd_hdsp

snd_pcm 118784 5 snd_hda_intel,snd_usb_audio,snd_hda_codec,snd_hdsp,snd_hda_core

snd 94208 10 snd_seq_device,snd_hwdep,snd_hda_intel,snd_usb_audio,snd_usbmidi_lib,snd_hda_codec,snd_hdsp,snd_timer,snd_pcm,snd_rawmidi

and added 10ee:0000 to

nano /etc/modprobe.d/vfio.conf

options vfio-pci ids=1002:67ff,1002:aae0,10ee:3fc5,10ee:0000 disable_vga=1

I also added this option on a new line:

softdep mpt3sas pre: vfio_pci

update-initramfs -u

shutdown -r now

I then tried

echo "options snd-hda-intel enable_msi=1" >> /etc/modprobe.d/snd-hda-intel.conf

and see the following when I run lspci -vv:

02:00.0 Multimedia audio controller: Xilinx Corporation RME Hammerfall DSP (rev 3c)

Subsystem: Xilinx Corporation RME Hammerfall DSP

Control: I/O- Mem+ BusMaster- SpecCycle- MemWINV- VGASnoop- ParErr- Stepping- SERR- FastB2B- DisINTx-

Status: Cap+ 66MHz- UDF- FastB2B- ParErr- DEVSEL=fast >TAbort- <TAbort- SERR- <PERR- INTx-

Interrupt: pin A routed to IRQ 18

IOMMU group: 11

Region 0: Memory at c2100000 (32-bit, non-prefetchable) [size=64K]

Capabilities: [40] Power Management version 3

Flags: PMEClk- DSI- D1- D2- AuxCurrent=0mA PME(D0-,D1-,D2-,D3hot-,D3cold-)

Status: D3 NoSoftRst+ PME-Enable- DSel=0 DScale=1 PME-

Capabilities: [48] MSI: Enable- Count=1/1 Maskable- 64bit+

Address: 0000000000000000 Data: 0000

Capabilities: [58] Express (v1) Endpoint, MSI 00

DevCap: MaxPayload 128 bytes, PhantFunc 0, Latency L0s <64ns, L1 <1us

ExtTag- AttnBtn- AttnInd- PwrInd- RBE+ FLReset- SlotPowerLimit 10.000W

DevCtl: CorrErr- NonFatalErr- FatalErr- UnsupReq-

RlxdOrd+ ExtTag- PhantFunc- AuxPwr- NoSnoop+

MaxPayload 128 bytes, MaxReadReq 512 bytes

DevSta: CorrErr- NonFatalErr- FatalErr- UnsupReq- AuxPwr- TransPend-

LnkCap: Port #0, Speed 2.5GT/s, Width x1, ASPM L0s, Exit Latency L0s <4us

ClockPM- Surprise- LLActRep- BwNot- ASPMOptComp-

LnkCtl: ASPM Disabled; RCB 64 bytes, Disabled- CommClk-

ExtSynch- ClockPM- AutWidDis- BWInt- AutBWInt-

LnkSta: Speed 2.5GT/s (ok), Width x1 (ok)

TrErr- Train- SlotClk- DLActive- BWMgmt- ABWMgmt-

Kernel driver in use: vfio-pci

Kernel modules: snd_hdsp

Lastly, I added the irqpoll option to the kernel command line:

nano /etc/default/grub

GRUB_CMDLINE_LINUX_DEFAULT="quiet intel_iommu=on iommu=pt irqpoll video=vesafb:off,efifb:off pci=nommconf pcie_acs_override=downstream,multifunction"

update-grub

shutdown -r now

but nothing has changed. It plays the audio for a couple of seconds and then it stops.

It seems that IRQ 18 (“Interrupt: pin A routed to IRQ 18”), which belongs to the sound card in IOMMU group 11, gets disabled?
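The `lspci -vv` output above shows `MSI: Enable-` and `Interrupt: pin A routed to IRQ 18`, so the card appears to be using a legacy INTx line rather than MSI. The “nobody cared” message means the kernel saw interrupts on IRQ 18 that no registered handler claimed, and it then disabled that line. One thing worth checking (an assumption, not a confirmed fix) is what else is attached to IRQ 18 on the host. This hypothetical helper prints that IRQ’s row from /proc/interrupts:

```shell
#!/usr/bin/env bash
# Print the /proc/interrupts row for one IRQ number, showing every handler
# registered on that line (e.g. a vfio-intx entry next to host drivers).
irq_handlers() {
  local irq="$1" file="${2:-/proc/interrupts}"
  # The first field of each row is the IRQ number followed by ":".
  awk -v want="${irq}:" '$1 == want' "$file"
}
```

For example `irq_handlers 18` while the VM is running. If host drivers appear on the same row as the vfio-intx entry, the line is shared with them.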

Howdy Nick! I hope all is well with you. I wanted to ping you as I’ve followed a lot of your instructions before and you have a similar config. My RX 580 passthrough blew up today as I upgraded to 7.2 with a 5.15 kernel (vfio-pci 0000:18:00.0: BAR 0: can’t reserve).

I’ve spent several hours now trying various things such as video=simplefb:off, and vendor-reset with echo 'device_specific' > "/sys/bus/pci/devices/0000:18:00.0/reset_method", with no luck. The vendor reset actually gets me to a bootable OS, but with no GPU support.

My POS Tyan S7103WGM2NR doesn’t have a working onboard vs. offboard BIOS setting.

Have you updated your rig to 7.2/5.15? Working? Fixes? Thanks so much!

I haven’t updated to the 5.15 kernel here yet. Check dmesg for further errors about BAR 0 that specify the affected address range, then look up that address range in the output of “cat /proc/iomem” to see which host driver is actually holding the card hostage. It might be something unexpected.

I’ll have to get on that later this week. I gave up shortly after that post and pinned the older kernel using “proxmox-boot-tool kernel pin 5.13.19-6-pve”. Everything just started working again and the family stopped complaining.
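A rough bash sketch of that lookup: give it an address from the BAR 0 message (with or without a 0x prefix) and it prints every /proc/iomem entry whose range contains it, parents and children alike. Run it as root, because /proc/iomem shows all-zero addresses to unprivileged users. The function name is hypothetical, and it relies on bash’s signed 64-bit arithmetic being wide enough for the addresses involved.

```shell
#!/usr/bin/env bash
# Print every /proc/iomem entry whose range contains the given address.
# $1: hex address (e.g. 0xc0000000); $2 (optional): file to read instead.
iomem_owner() {
  local addr=$((16#${1#0x})) file="${2:-/proc/iomem}"
  local line range start end
  while IFS= read -r line; do
    range="${line%% : *}"              # "  c0000000-cfffffff"
    range="${range// /}"               # drop the nesting indentation
    [[ $range == *-* ]] || continue
    start="${range%-*}"; end="${range#*-}"
    if (( 16#$start <= addr && addr <= 16#$end )); then
      printf '%s\n' "$line"            # keep indentation to show nesting
    fi
  done < "$file"
}
```

The most deeply indented line printed is usually the most specific claimant of that range.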

Hi Nick,

I’m having problems passing through my AMD GPU (Radeon Pro WX 4100) to my Monterey VM (set up following your guide).

I have two PVE systems, one Intel (i7 5960X) and one AMD (Threadripper 3960X).

On the Intel system I can pass through the GPU correctly and it works. No vbios file is needed.

On the AMD system I can pass through the GPU and the OpenCore options display correctly, but when I select macOS I get an Apple logo with no progress bar. If I enable a VMware display I can boot Monterey and see the AMD GPU in System Information, but no driver is loaded.

Also on the AMD system, I can pass through the AMD GPU successfully to Windows or Linux VMs. The issue only occurs on my Monterey VM.

Do you have any pointers as to how I could troubleshoot this issue?

Thank you!

In your host UEFI settings turn off Resizable BAR, and try toggling Above 4G Decoding from whatever it is currently set to.

Thanks Nick, that worked!

It’s great to finally have an accelerated desktop.

The obvious next question is, is there a way to make it work with Resizable BAR on?

I have my Linux and Windows 11 VMs running with Resizable BAR using the argument -fw_cfg name=opt/ovmf/X-PciMmio64Mb,string=65536

Do you know why this doesn’t seem to work for macOS?

Thanks again.

Hi Nick,

Thank you for the tutorials! They’ve been easy to follow & have got me set up relatively quickly & successfully.

However, I’m now stuck at the GPU passthrough stage; I can’t get it to work at all. I’ve got a 5800X & 6900XT on an X570 motherboard. I’ve tried with Resizable BAR & Above 4G Decoding on/off, and it always gets to the boot screen, displays a BAR 0 error & then flickers off after loads of numbers scroll by, only to restart & display a completely white screen.

I’ve blacklisted the AMD drivers in host, put the various tweaks in grub/systemd-boot, verified IOMMU is working with “dmesg | grep -e DMAR -e IOMMU”, ensured “video=efifb:off” is in kernel, & tried various permutations to make progress.
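To double-check that those tweaks actually reached the running kernel, compare them against /proc/cmdline. This hypothetical helper prints every expected parameter that is missing. The list you pass is up to you (the usage line below only reuses parameters mentioned in this thread), and matching is on whole tokens, so a combined value like video=vesafb:off,efifb:off does not count as video=efifb:off.

```shell
#!/usr/bin/env bash
# Print each expected kernel parameter that is NOT in a cmdline file.
# $1: file holding the command line (normally /proc/cmdline); rest: params.
missing_kernel_params() {
  local file="$1"; shift
  local -a words
  local want word found
  read -r -a words < "$file"           # /proc/cmdline is one line
  for want in "$@"; do
    found=0
    for word in "${words[@]}"; do
      if [ "$word" = "$want" ]; then found=1; break; fi
    done
    [ "$found" -eq 1 ] || printf '%s\n' "$want"
  done
}
```

Usage: `missing_kernel_params /proc/cmdline iommu=pt video=efifb:off` prints nothing when both are present.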

I also tried to google the various errors, but had pretty limited results, so I’m probably searching for the wrong thing.

Do you have any suggestions that could point me in the right direction?

Thank you

Resizable BAR definitely has to be off for macOS guests.

Do you mean it’s panicking in Proxmox or in macOS?

I would focus your efforts on the 6900XT because that one doesn’t suffer from the AMD Reset Bug.

Hi,

I tried all your suggestions for GPU passthrough and was still getting reboots when macOS starts. What I realised is that the host needs to be told to release the video card completely.

I run the following commands after the host starts, and they do the trick:

# Remove the RX580 card (video and audio) from the host so that passthrough to macOS can work

echo 1 > /sys/bus/pci/devices/0000:01:00.0/remove

echo 1 > /sys/bus/pci/devices/0000:01:00.1/remove

echo 1 > /sys/bus/pci/rescan
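The same remove-and-rescan steps, generalised to every function of one PCI slot. This is a sketch: the function name and the optional sysfs-root argument (there only so it can be tried against a scratch directory) are hypothetical, and on the real /sys it must run as root. After the rescan the kernel re-enumerates the card, so which driver picks it up still depends on your vfio-pci and blacklist configuration.

```shell
#!/usr/bin/env bash
# Detach every function of a PCI slot (e.g. 0000:01:00) from the host,
# then ask the kernel to rescan the bus.
# $2 (optional, hypothetical) overrides /sys/bus/pci for testing.
release_pci_device() {
  local slot="$1" root="${2:-/sys/bus/pci}" fn
  # Matches 0000:01:00.0, 0000:01:00.1, ... (video, HDMI audio, etc.).
  for fn in "$root"/devices/"$slot".*; do
    [ -e "$fn/remove" ] || continue    # skip an unmatched glob
    echo 1 > "$fn/remove"
  done
  echo 1 > "$root/rescan"
}
```

`release_pci_device 0000:01:00` performs the same three writes as the commands above.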

I have tried everything to get my RX580 working with my Monterey VM. I followed your guide to create the VM, and this one for the GPU, as well as the PCI passthrough one. My host machine only has this GPU, no iGPU, and I have disabled the host from using it. My VM will boot, but I can’t connect over VNC unless I also have Display set to VMware compatible or standard VGA. I can see the display device in the System Report on macOS, but I can’t seem to get the system to use it. Help, please.

I’m having the same issue with a Sapphire Radeon 7950. I can’t connect over VNC unless Display is set to VMware compatible.

I am getting ready to switch over to Proxmox from a legacy hackintosh install. I want to test everything out before I purchase new hardware or decommission my current hackintosh, so I am running Proxmox inside VMware Fusion. I have read this should not be an issue. I have it installed and all went well. I have hypervisor enabled in the VM, in case that matters. The tutorial works great for me until I get to the point where I boot into OpenCore for the first time to install. When I try to start the VM, I always get this error and have been unable to get around it:

kvm: error: failed to set MSR 0x48f to 0x7fffff00036dfb

kvm: ../target/i386/kvm/kvm.c:2893: kvm_buf_set_msrs: Assertion `ret == cpu->kvm_msr_buf->nmsrs' failed.

TASK ERROR: start failed: QEMU exited with code 1

I was able to install Windows 10 in this environment with no problems.

Any ideas would be appreciated.

I think that’s an MSR for VMX, which suggests VMWare is advertising support for VMX to the guest that it cannot actually provide.
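Some context for that reading: in Intel’s SDM, MSR 0x48f is IA32_VMX_TRUE_EXIT_CTLS, one of the VMX capability MSRs. For these, bits 31:0 mark controls that must be 1 and bits 63:32 mark controls that are allowed to be 1. The hypothetical helper below just splits the value from the error into those halves. KVM refused to set that value, which is consistent with the outer hypervisor advertising VMX capabilities it does not fully back.

```shell
#!/usr/bin/env bash
# Split a 64-bit VMX "TRUE_*_CTLS" capability value into its halves.
# Assumption (Intel SDM, Appendix A): bits 31:0 = controls that must be 1,
# bits 63:32 = controls that may be 1.
split_vmx_ctls() {
  local value=$(( $1 ))               # accepts hex such as 0x7fffff00036dfb
  printf 'must-be-1: 0x%08x\n' $(( value & 0xffffffff ))
  printf 'may-be-1:  0x%08x\n' $(( (value >> 32) & 0xffffffff ))
}
```

`split_vmx_ctls 0x7fffff00036dfb` prints `must-be-1: 0x00036dfb` and `may-be-1:  0x007fffff`.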

I would install Proxmox on a separate disk and boot your computer using that bare metal instead.

Hi Nick,

As always, you are a legend! Thank you for all your time helping people around the globe.

I have two issues I can’t figure out by myself and would appreciate it if you could point me in the right direction.